AI has made it easier than ever to scale SEO fast, but it comes with high risk.

What happens when the party is over and short-term wins become long-term losses?

Recently, I worked with a site that had scaled aggressively using AI-powered programmatic SEO.

It worked until it didn’t, resulting in a steep decline in rankings that I doubt they will recover from.

It’s not worth the fallout when the party is over. And, eventually, it will be.

Here are the lessons learned along the way and how to approach things differently.

When the party’s over

The risks of AI-driven content strategies aren’t always immediately obvious. In this case, the signs were there long before rankings collapsed.

This client came to us after reading my last article on the impact of the August 2024 Google core update.

Their results were declining fast, and they wanted us to audit the site to shed some light on why this might be happening.

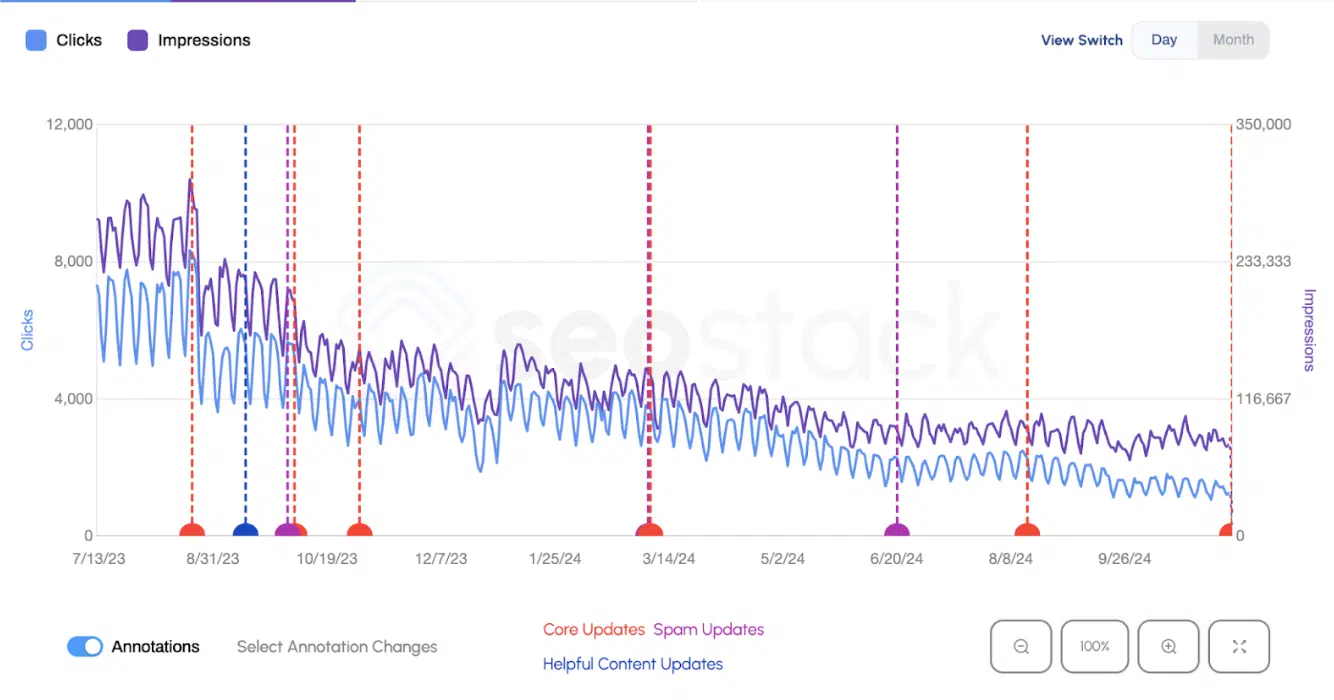

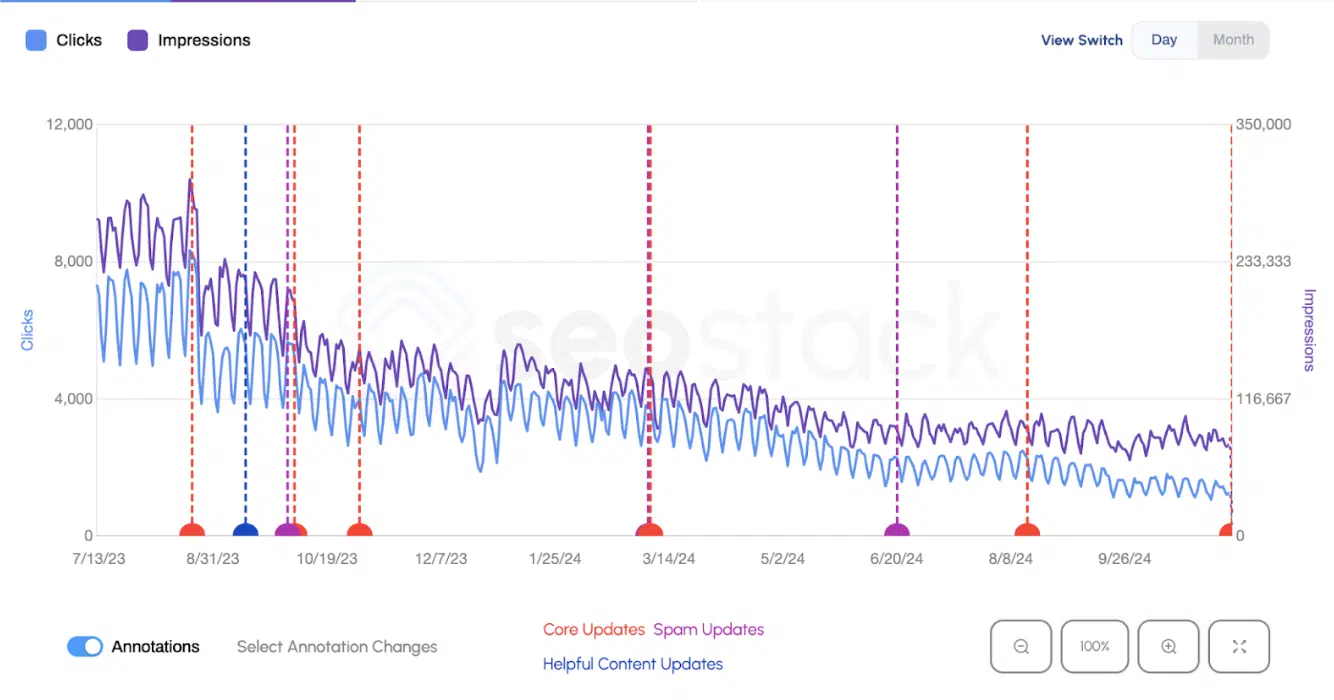

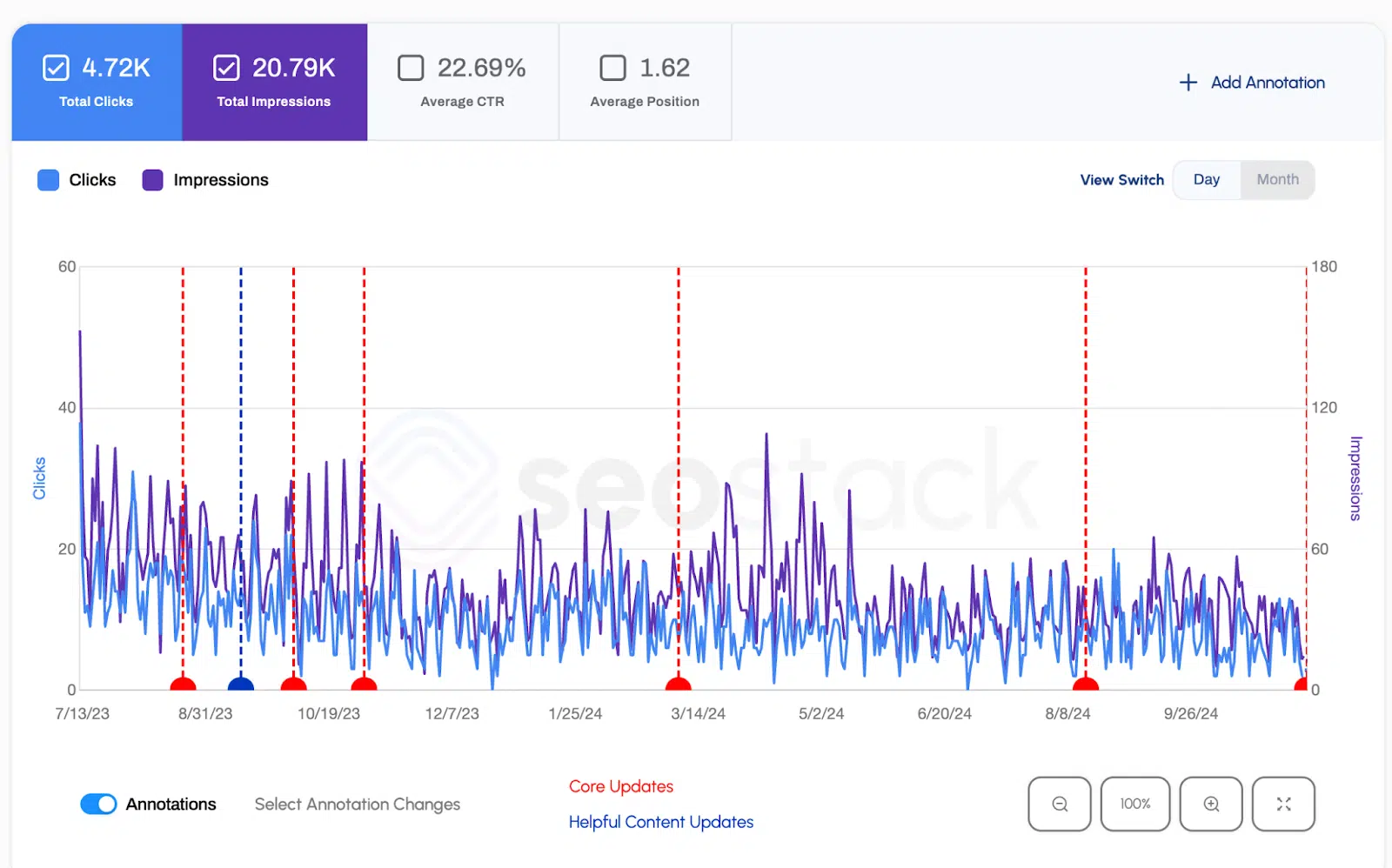

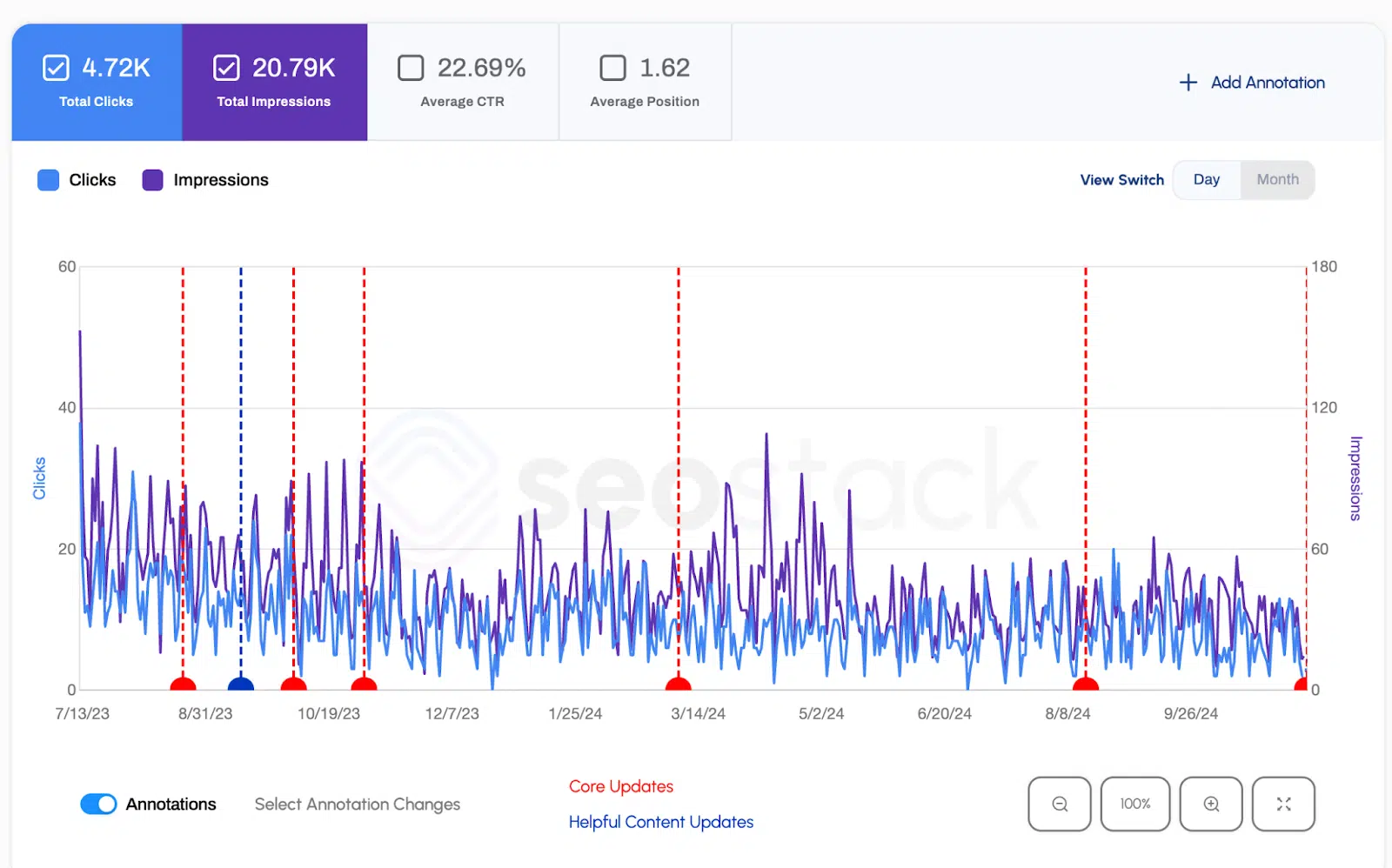

The August 2023 core update hit the site first, followed by even deeper losses after the helpful content update.

These weren’t just temporary ranking fluctuations; they signaled a deeper problem.

Obviously, for a site whose main channel was SEO, this had a detrimental impact on profits.

The first step was to assess the impact we were seeing.

- Is the site still ranking for relevant queries and simply dropping in positions?

- Or has it lost queries as well as clicks?

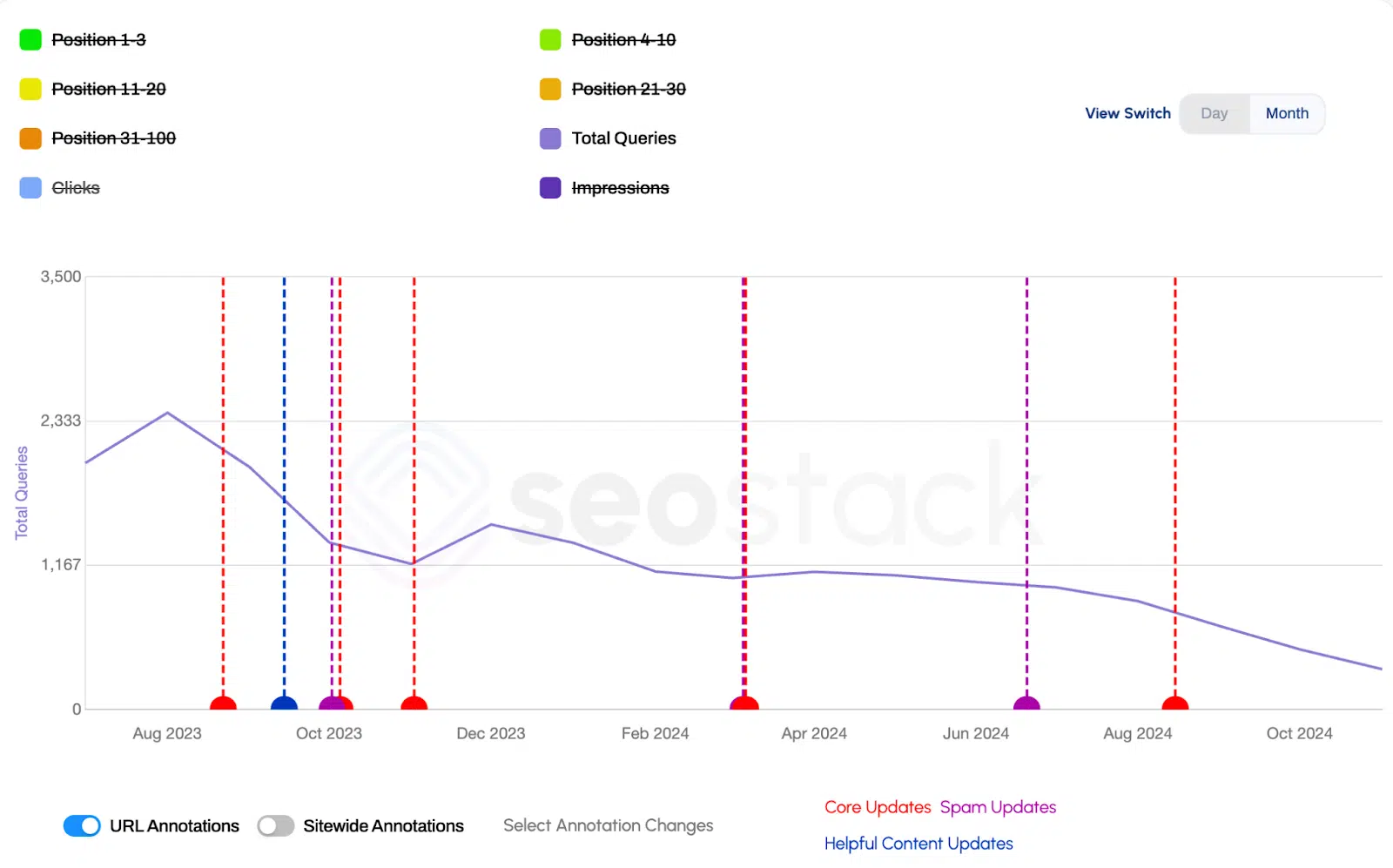

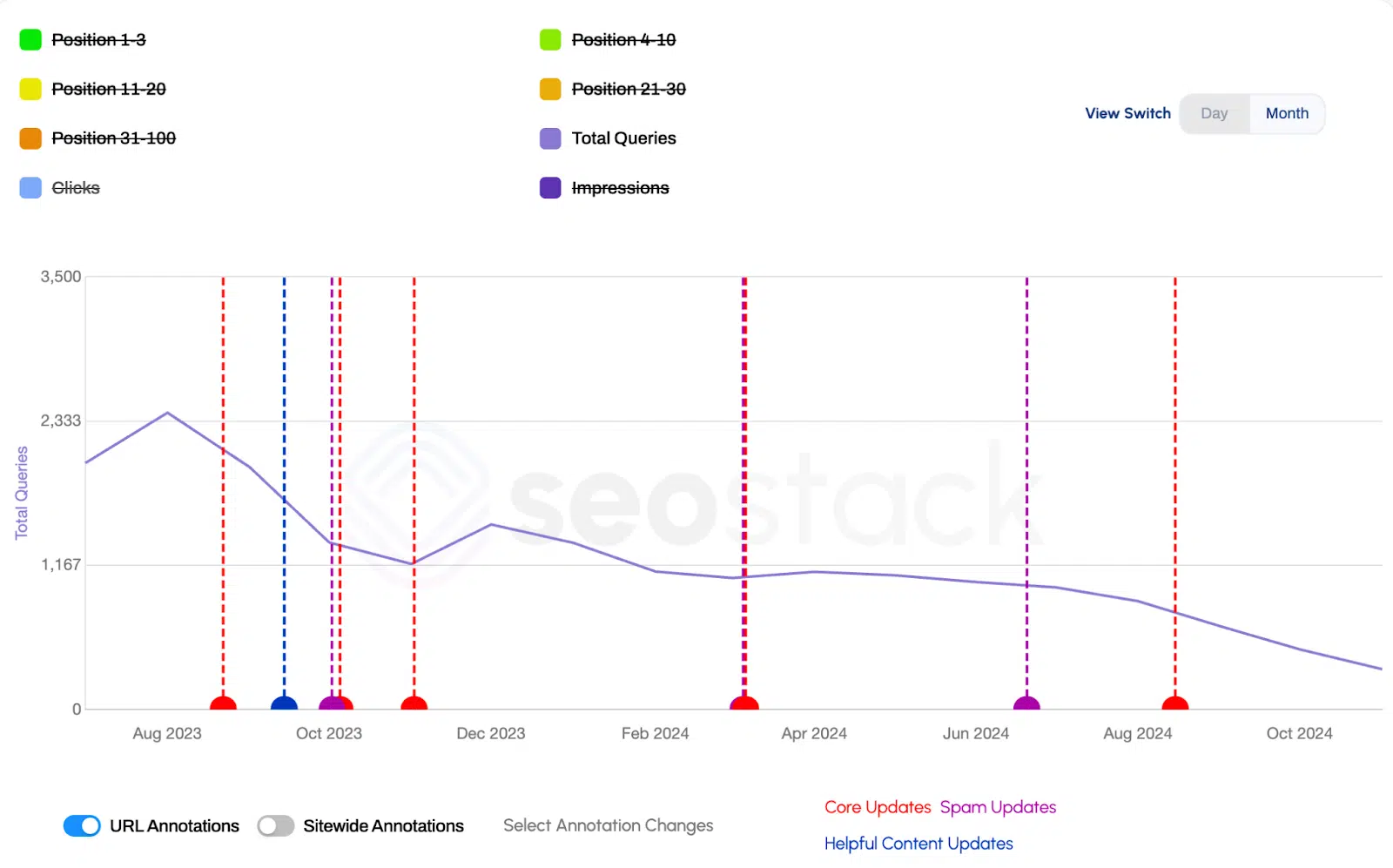

I’m a fan of Daniel Foley Carter’s framework for assessing impact as slippage or devaluation, which his SEO Stack tool makes easier.

As he puts it:

- “Query counting is useful to assess whether a page is suffering from decline or slippage, which is great if your site gets spanked by HCU/E-E-A-T infused Core Updates.”

The two scenarios are quite different and have different implications:

- Slippage: The page retains its query counts, but clicks drop.

- Devaluation: The page’s query counts fall at the same time as its queries drop into lower position groups.

Slippage means the content is still useful, but Google has deprioritized it in favor of stronger competitors or shifts in search intent.

Devaluation is far more severe: Google now sees the content as low-quality or irrelevant.
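To make the distinction concrete, here is a minimal Python sketch that labels a page from two periods of Search Console data. It assumes you have already exported, per page, the number of distinct queries, how many of those queries rank in the top 10, and clicks. The `PagePeriod` structure, the function names, and the 20% threshold are hypothetical choices for illustration, not part of Daniel Foley Carter’s framework or of SEO Stack.

```python
from dataclasses import dataclass


@dataclass
class PagePeriod:
    """Search Console totals for one page over one period (hypothetical structure)."""
    queries: int        # distinct queries the page received impressions for
    top10_queries: int  # queries where the page's average position was 10 or better
    clicks: int


def relative_drop(old: int, new: int) -> float:
    """Fractional decline from old to new (0.25 means a 25% drop)."""
    return 0.0 if old == 0 else (old - new) / old


def classify_impact(before: PagePeriod, after: PagePeriod,
                    threshold: float = 0.2) -> str:
    """Label a page as 'devaluation', 'slippage', or 'stable'.

    The 20% threshold is an illustrative assumption, not part of the framework.
    """
    lost_queries = relative_drop(before.queries, after.queries) >= threshold
    lost_top10 = relative_drop(before.top10_queries, after.top10_queries) >= threshold
    lost_clicks = relative_drop(before.clicks, after.clicks) >= threshold

    if lost_queries and lost_top10:
        # Fewer queries overall, and fewer of them in the top-10 position group.
        return "devaluation"
    if lost_clicks:
        # Query coverage roughly intact, but clicks fell away.
        return "slippage"
    return "stable"


def share_devalued(pages: dict[str, tuple[PagePeriod, PagePeriod]]) -> float:
    """Fraction of sampled pages classified as devalued."""
    labels = [classify_impact(before, after) for before, after in pages.values()]
    return labels.count("devaluation") / len(labels)
```

Applied to a sample of pages, `share_devalued` returns the fraction labeled as devalued, which is the kind of summary figure reported for the sample below. Checking the top-10 group alongside the raw query count is a simple stand-in for “dropping into lower position groups”; a fuller version would compare several position buckets.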

We analyzed a sample of the top revenue-driving pages to see if there was a pattern.

Of the 30 pages analyzed, more than 80% lost overall query counts and positions.

For example, the page below was the top performer in both traffic and revenue. Its query decline was severe.

It was clear that most pages had been devalued and that the site as a whole was seen as low quality.

The cause behind the result

The client hadn’t received a manual penalty, so what caused the decline?

A mix of low-quality signals and scaled content strategies made their site appear spammy in Google’s eyes.

Let’s look at the biggest red flags we found:

- Templated content and duplication.

- Low site authority signals.

- Inflated user engagement.

- Misuse of Google’s Indexing API.

Templated content and duplication

The site in question had excessive templated content with substantial duplication, leading Google (and me) to believe the pages were generated using poor AI-enabled programmatic methods.

One of the first things we checked was how much of the site’s content was duplicated.

Interestingly, Screaming Frog didn’t flag this as a major issue, even at a 70% similarity threshold. Siteliner gave us better results: over 90% duplication.

Given what I saw in a manual check of several pages, this was no surprise. Those pages were very similar.

Each piece followed a clear template, and there was very little differentiation between the content.
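For readers who want to sanity-check duplication outside a crawler, the sketch below compares page bodies using word shingles and Jaccard similarity. This is a generic near-duplicate technique, not how Screaming Frog or Siteliner calculate their figures, so its percentages won’t match either tool. The 5-word shingle size and 0.7 threshold are illustrative assumptions, and the `pages` dictionary stands in for main-content text you have already extracted.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences (shingles) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (1.0 = identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicate_pairs(pages: dict[str, str], threshold: float = 0.7, k: int = 5):
    """Yield (url_a, url_b, similarity) for every page pair at or above threshold."""
    sets = {url: shingles(body, k) for url, body in pages.items()}
    for (url_a, set_a), (url_b, set_b) in combinations(sets.items(), 2):
        similarity = jaccard(set_a, set_b)
        if similarity >= threshold:
            yield url_a, url_b, round(similarity, 3)
```

Comparing every pair is quadratic in the number of pages, which is fine for a sample of a few hundred URLs. For a full programmatic site, MinHash with locality-sensitive hashing is the usual way to approximate the same Jaccard similarity at scale.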

When asked, the client denied using automation but said AI was used. However, they insisted their content went through QA and editorial review.

Unfortunately, the data told a different story.

Site authority

From the Google API leak, the DOJ trial, and insights from a recent Google exploit, we know that Google uses some form of site authority signal.

According to the exploit, the quality score is based on:

- Brand visibility (e.g., branded searches).

- User interactions (e.g., clicks).

- Anchor text relevance around the web.

Relying on short-term tactics can tank your site’s reputation and score.

Brand signals

To understand the impact on our new client, we first looked at brand vs. non-brand search.

As suspected, non-brand keywords nosedived.

Brand was less affected.

This is expected, since branded keywords are usually more resilient than non-brand ones.

After all, the intent there is strong: those users search for your brand with a clear intent to engage.

It also often takes longer for an impact to show up on branded keywords.

Many people searching for a specific brand are repeat visitors, and their search behavior is less sensitive to short-term ranking fluctuations.

Finally, brand traffic is more influenced by broader marketing efforts, such as paid ads, email campaigns, or offline marketing.

But something else stood out: the brand’s visibility didn’t match its historical traffic trends.

We examined their branded search trajectory vs. overall traffic, and the findings were telling:

- Their brand recognition was very low relative to traffic volume, even accounting for the fact that GSC samples data in filtered reports.

- Despite their increased visibility and active social presence, branded searches showed no meaningful growth curve.
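A brand vs. non-brand split like this can be reproduced from a query-level Search Console export. The sketch below assumes rows of `(query, clicks)` and a hand-written brand regex; the function names and any `acme` pattern you see in examples are placeholders. Search Console omits anonymized queries from query-level data, so these buckets won’t add up to the site’s total clicks; treat the branded share as a trend indicator rather than an exact figure.

```python
import re
from collections import defaultdict


def split_brand_clicks(rows, brand_pattern: str) -> dict[str, int]:
    """Sum clicks into 'brand' and 'non-brand' buckets.

    rows: iterable of (query, clicks) pairs, e.g. a query-level Search Console export.
    brand_pattern: regex for brand terms (hypothetical; include common misspellings).
    """
    brand_re = re.compile(brand_pattern, re.IGNORECASE)
    totals = defaultdict(int)
    for query, clicks in rows:
        totals["brand" if brand_re.search(query) else "non-brand"] += clicks
    return {"brand": totals["brand"], "non-brand": totals["non-brand"]}


def pct_change(old: float, new: float) -> float:
    """Percent change from old to new."""
    if old == 0:
        raise ValueError("baseline is zero; percent change is undefined")
    return (new - old) / old * 100


def branded_share(totals: dict[str, int]) -> float:
    """Fraction of query-level clicks that came from branded queries."""
    total = totals["brand"] + totals["non-brand"]
    return totals["brand"] / total if total else 0.0


def brand_report(before_rows, after_rows, brand_pattern: str) -> dict[str, float]:
    """Percent change in clicks for brand vs. non-brand queries between two periods."""
    before = split_brand_clicks(before_rows, brand_pattern)
    after = split_brand_clicks(after_rows, brand_pattern)
    return {bucket: round(pct_change(before[bucket], after[bucket]), 1)
            for bucket in ("brand", "non-brand")}
```

Running `branded_share` on each month in turn gives a branded-search trajectory to set against overall traffic. A share that stays flat or shrinks while total clicks climb is the pattern described above.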

We know that Google uses some kind of authority metric for sites.

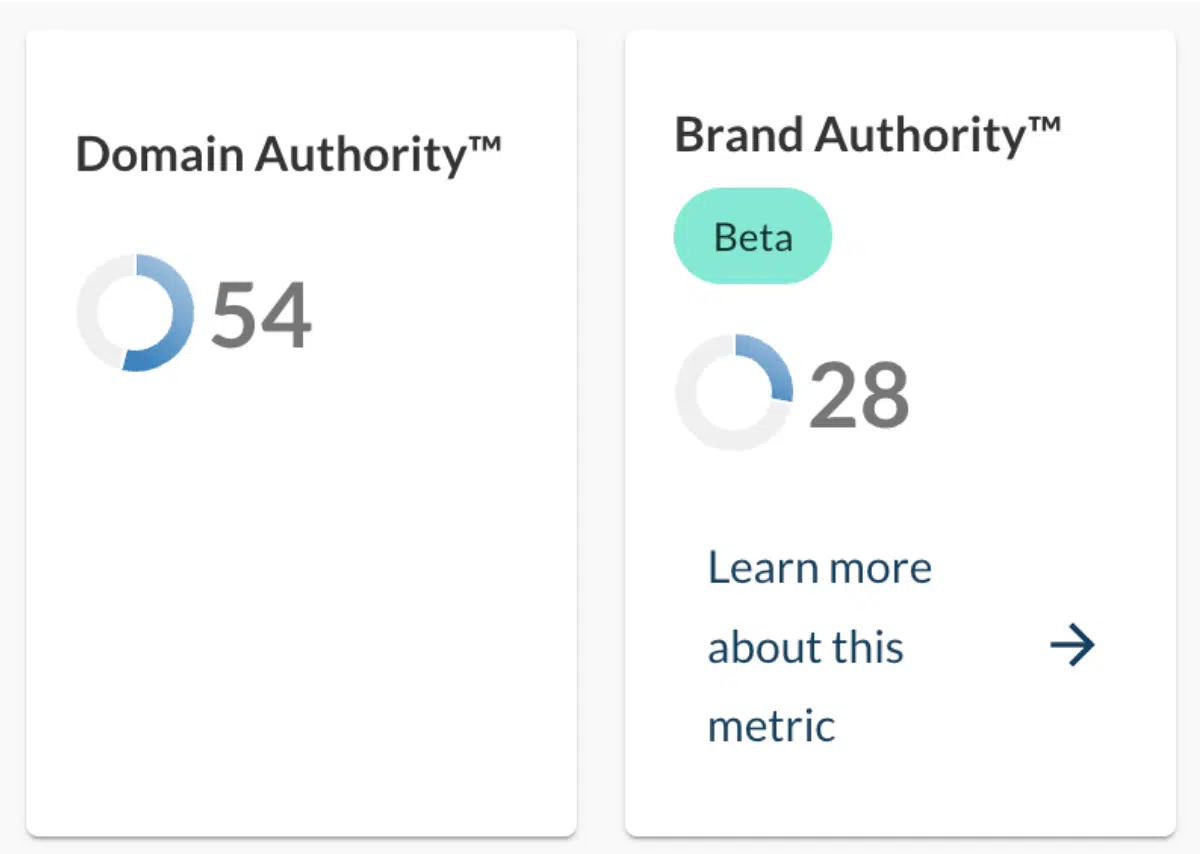

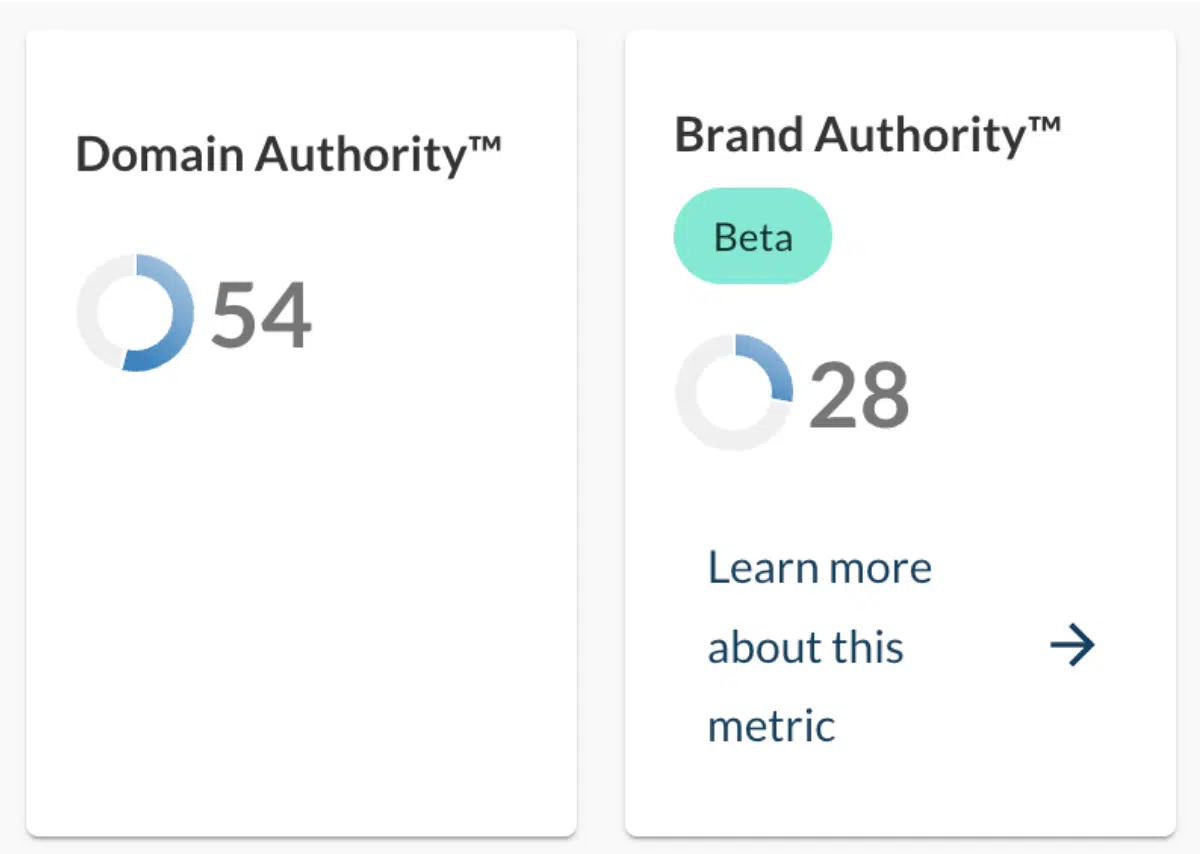

Several SEO tools have their own equivalents that attempt to emulate how this is tracked. One example is Moz, with its Domain Authority (DA) and, more recently, Brand Authority (BA) metrics.

The DA score has been around for ages. While Moz says it combines various factors, in my experience, the main driver is the site’s link profile.

BA, on the other hand, is a metric launched in 2023. It relates to a brand’s broader online influence and focuses on the strength of a website’s branded search terms.

Let’s be clear: I’m not convinced that DA or BA are anywhere near how Google ranks websites. They are third-party metrics, not confirmed ranking signals. And they should never be used as KPIs!

However, the pattern we saw for this client in the GSC brand data reminded me of both metrics, especially Moz’s recent research on the impact of HCU (and the subsequent core updates).

The research suggests that HCU may focus more on balancing brand authority against domain authority than on solely assessing the subjective helpfulness of content.

Websites with high DA but low BA (relative to their DA) tend to experience demotions.

While these metrics shouldn’t be taken as ranking signals, I can see the logic here.

If your site has lots of links but no one is searching for your brand, it will look shady.

This aligns with Google’s longstanding goal of preventing “over-optimized” or “over-SEOed” sites from ranking well if they lack genuine user interest or navigational demand.

Google Search Console already pointed to a problem with brand perception.

I wanted to see if the DA/BA analysis pointed the same way.

The DA for this client’s site was high, while the BA was strikingly low. This made the site’s DA/BA ratio very high, about 1.93.

The site had a high DA thanks to SEO efforts but a low BA (indicating limited genuine brand interest or demand).

This imbalance made the site appear “over-optimized” to Google, with little brand demand or user loyalty.
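If you want to run the same comparison, the arithmetic is simple, but the benchmark matters more than the raw number. This hypothetical sketch divides Moz DA by BA and compares the result against the median ratio of a set of competitors you choose. The 1.25× margin is an arbitrary assumption, and since neither metric is a Google signal, treat the output as a prompt for investigation rather than a KPI.

```python
from statistics import median


def da_ba_ratio(da: float, ba: float) -> float:
    """Moz Domain Authority divided by Moz Brand Authority (both on a 1-100 scale)."""
    if ba <= 0:
        raise ValueError("Brand Authority must be positive")
    return da / ba


def flag_imbalance(site: tuple[float, float],
                   peers: list[tuple[float, float]],
                   margin: float = 1.25) -> bool:
    """True if the site's DA/BA ratio exceeds the peer median by `margin` times.

    `site` and each peer are (DA, BA) pairs you look up yourself; the 1.25
    margin is an arbitrary illustrative cut-off, not a Moz or Google threshold.
    """
    peer_median = median(da_ba_ratio(da, ba) for da, ba in peers)
    return da_ba_ratio(*site) > peer_median * margin
```

Benchmarking against peers rather than a fixed number avoids pretending there is a universal “safe” ratio; what looks unbalanced in one niche may be normal in another.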

User engagement

The next step was to look at user engagement.

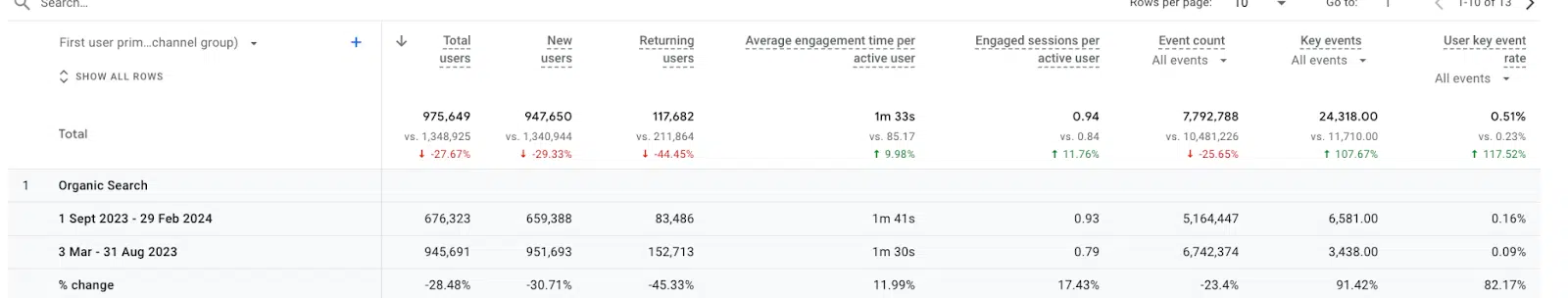

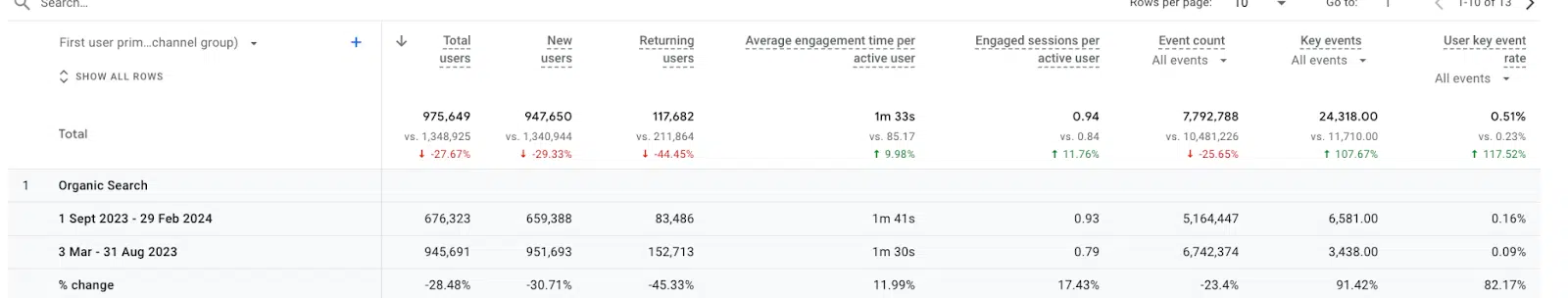

We first compared organic users in GA4 over the six months before and after the August 2023 update.

Traffic was down for both new and returning users, but most engagement metrics were up, except for event counts.

Was it simply that fewer visitors were coming to the site, but those who did found the content more useful than before?

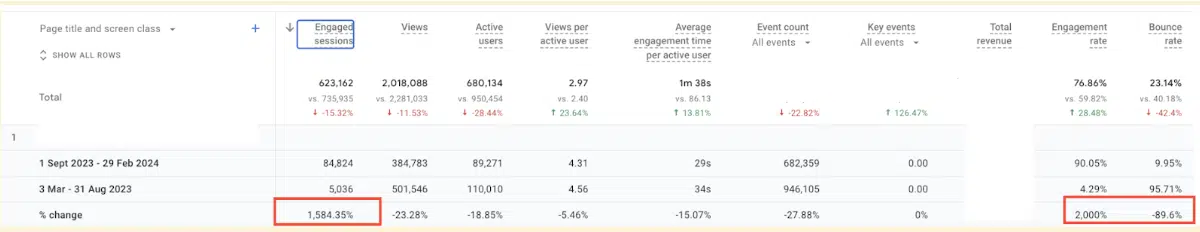

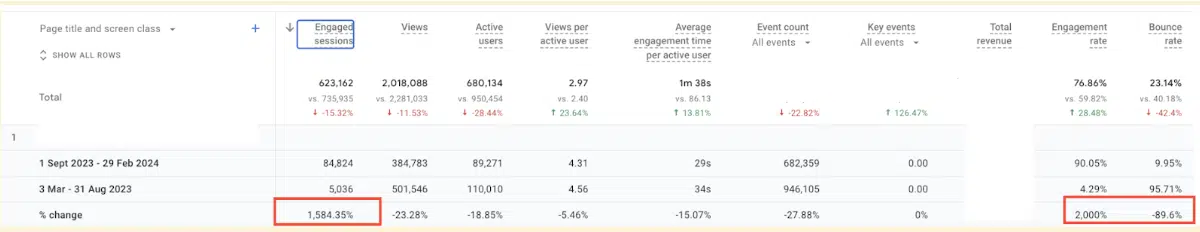

To dig deeper, we looked at an individual page that lost rankings.

Their strongest-performing page before the update showed:

- Engaged sessions were up 1,000%.

- The engagement rate increased 2,000%.

- Bounce rate dropped by 89%.

(Note: While these metrics can be useful in cases like this, they shouldn’t be fully trusted. Bounce rate, in particular, is tricky. A high bounce rate doesn’t always mean a page isn’t useful, and it can be easily manipulated. Tracking meaningful engagement with custom events and audience data is a better approach.)

At first glance, these may look like positive signs, but in reality, these numbers were likely artificially inflated.
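One way to surface numbers like these systematically is to compute each metric’s fold change between periods and flag anything that moves by more than a chosen factor in either direction. The sketch below does that; the metric names and the 4× cut-off are assumptions for illustration. Fold change treats a rise and a fall symmetrically, which matters because a rate such as bounce rate can never fall by more than 100% and would slip past a simple percent-change threshold. A flag only marks a page for closer review; it doesn’t prove manipulation.

```python
def fold_change(before: float, after: float) -> float:
    """Ratio of the new value to the old one (2.0 = doubled, 0.5 = halved)."""
    if before <= 0 or after <= 0:
        raise ValueError("fold change needs positive values in both periods")
    return after / before


def flag_spikes(before: dict[str, float], after: dict[str, float],
                max_fold: float = 4.0) -> dict[str, float]:
    """Return {metric: percent change} for metrics that moved by max_fold or more.

    A 4x rise and a fall to a quarter are treated as equally suspicious.
    The 4.0 default is an illustrative assumption, not an industry standard.
    """
    flagged = {}
    for metric, old in before.items():
        ratio = fold_change(old, after[metric])
        if ratio >= max_fold or ratio <= 1 / max_fold:
            flagged[metric] = round((ratio - 1) * 100, 1)
    return flagged
```

With hypothetical before/after values that reproduce the changes listed above (+1,000% engaged sessions, +2,000% engagement rate, -89% bounce rate), all three metrics are flagged, while a 10% dip in event count is not.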

Tactics such as click manipulation are not new. However, with the findings from Google’s API leak and the exploit data, I fear they may become more attractive.

Both the API leak and the exploit have led to misunderstandings about what engagement signals mean in how Google ranks websites.

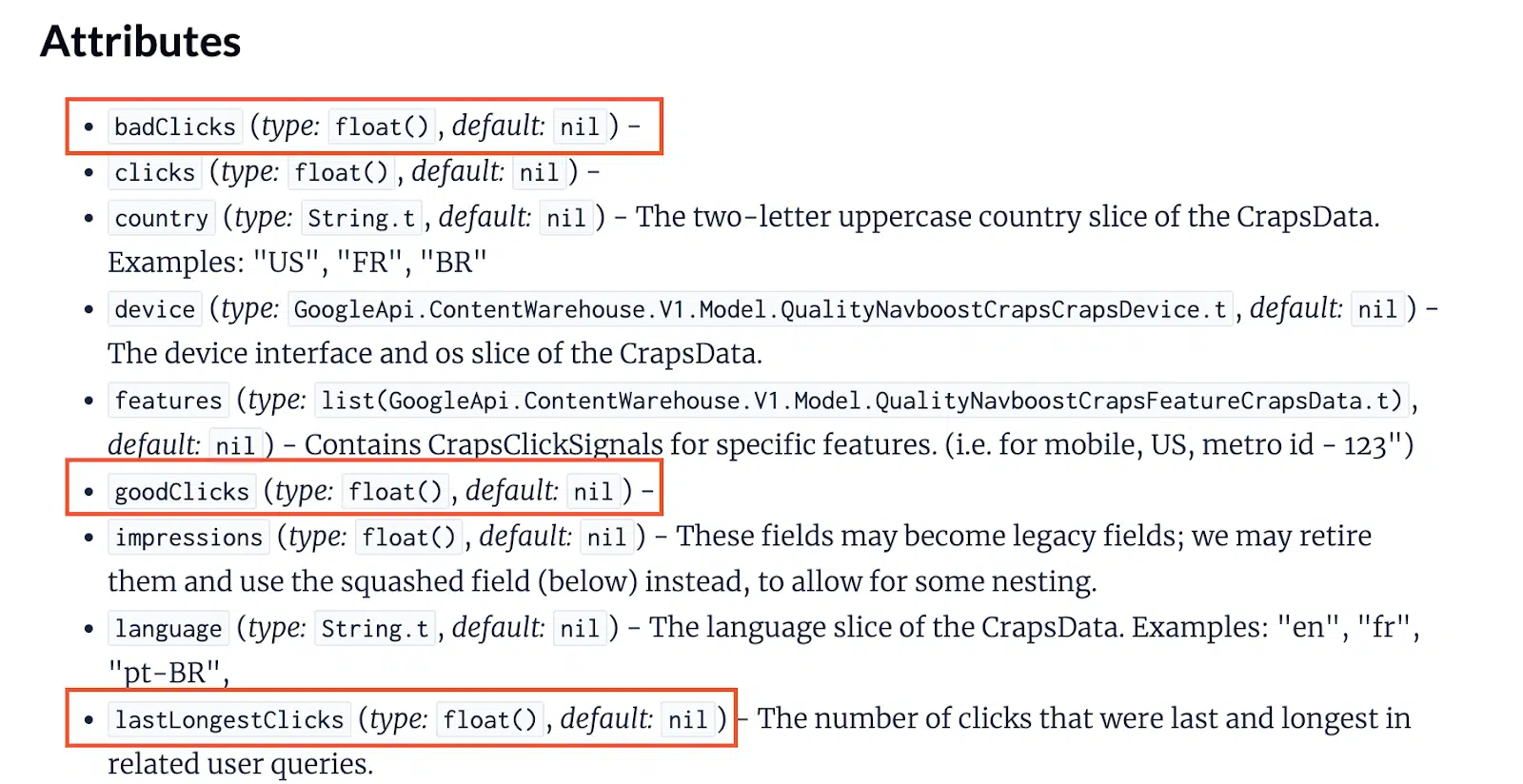

From my understanding, how NavBoost tracks clicks is much more complex. It’s not just about the number of clicks; it’s about their quality.

This is why attributes from the leak include elements such as badClicks, goodClicks, and lastLongestClicks.

And, as Candour’s Mark Williams-Cook said at the recent Search Norwich:

- “If your site has a meteoric rise, but nobody has heard of you, it looks shady.”

Inflating engagement signals instead of focusing on genuine engagement won’t produce long-term results.

Using this tactic after the decline only confirms why the rankings should have dropped in the first place.

Unsurprisingly, the rankings kept declining for this client despite the short-term spikes in engagement.

(Note: We could also have looked at other factors, such as spikes in user locations and IP analysis. However, the goal wasn’t to catch the client using risky tactics or assign blame; it was to help them avoid those mistakes in the future.)

Misusing the Google Indexing API

Even before starting this project, I spotted some red flags.

One major issue was the misuse of the Google Indexing API in Search Console to speed up indexing.

The API is intended only for job posting sites and sites hosting livestreamed events.

We also know that all submissions undergo rigorous spam detection.

While Google has said misuse won’t directly hurt rankings, it was another sign that the site was engaging in risky tactics.
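For context, this is roughly what a legitimate Indexing API notification looks like. Google’s documentation describes a `POST` to `https://indexing.googleapis.com/v3/urlNotifications:publish` with a JSON body containing the `url` and a `type` of `URL_UPDATED` or `URL_DELETED`, authorized with a service account using the `https://www.googleapis.com/auth/indexing` scope. The sketch below only builds that body and refuses pages without eligible markup. How you extract `schema_types` from a page is left as an assumption, and the type check is simplified (Google’s livestream case is `BroadcastEvent` embedded in a `VideoObject`).

```python
import json

# Endpoint as described in Google's Indexing API documentation (not called here).
PUBLISH_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"

# Simplified eligibility check: the API is supported only for job postings
# (JobPosting) and livestreams (BroadcastEvent embedded in a VideoObject).
ELIGIBLE_TYPES = {"JobPosting", "BroadcastEvent"}


def is_eligible(schema_types: set[str]) -> bool:
    """True if the page's structured data includes an eligible type."""
    return bool(ELIGIBLE_TYPES & schema_types)


def build_notification(url: str, schema_types: set[str], deleted: bool = False) -> str:
    """Return the JSON body to POST to PUBLISH_ENDPOINT, or raise if ineligible."""
    if not is_eligible(schema_types):
        raise ValueError(f"{url} has no JobPosting/BroadcastEvent markup; "
                         "leave it to sitemaps and normal crawling")
    notification = {"url": url, "type": "URL_DELETED" if deleted else "URL_UPDATED"}
    return json.dumps(notification)
```

Putting the eligibility check in front of the request means an ordinary article or product page can never be pushed through the API by accident; those pages belong in XML sitemaps.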

The problem with scaling fast using risky tactics

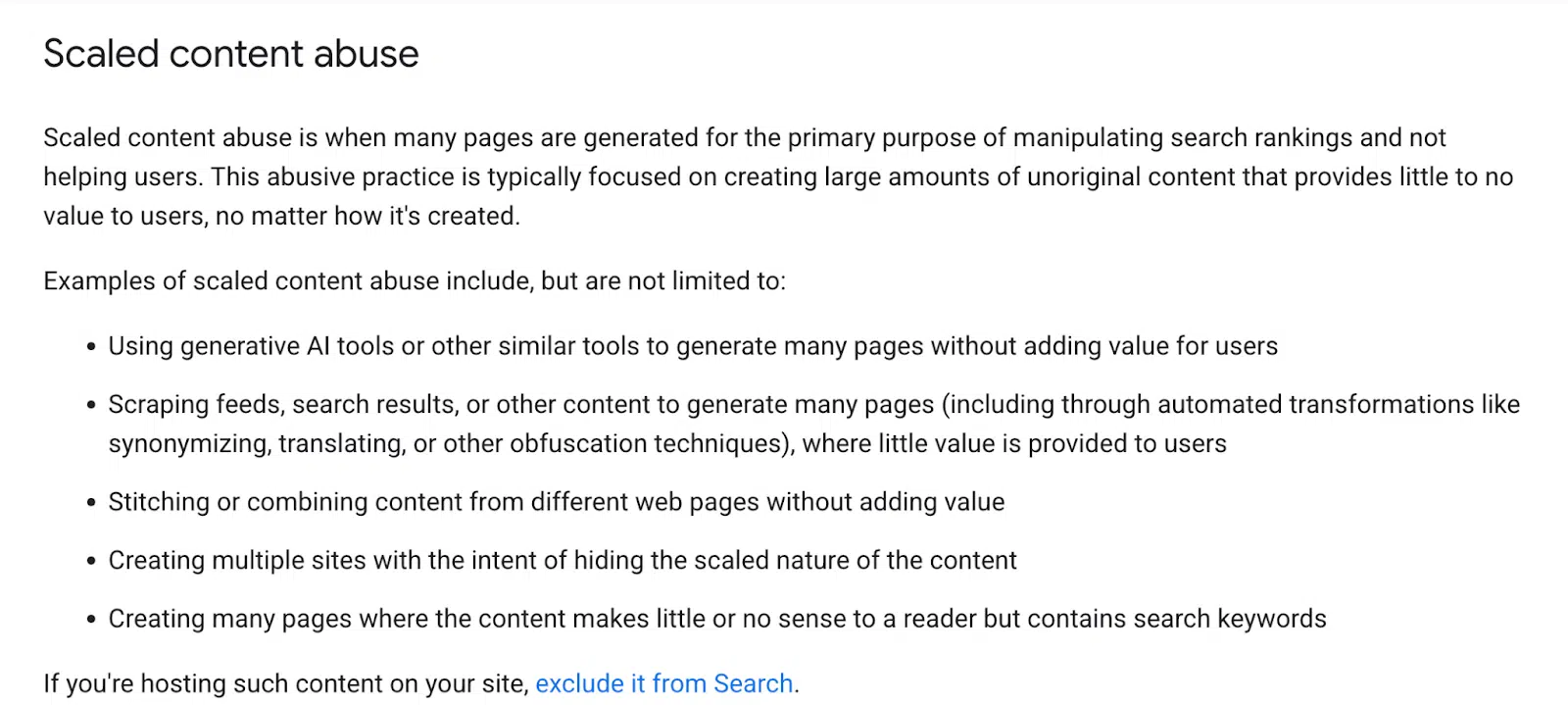

In 2024, Google updated its spam policies to reflect a broader understanding of content spam.

What used to be a section on “spammy automatically generated content” is now folded into the section on “scaled content abuse.”

Google has shifted its focus from how content is produced to why and for whom it is created.

The scale of production and the intent behind it now play a key role in determining spam.

Mass-producing low-value content, whether AI-generated, automated, or written by hand, is (and should be) considered spam.

Unsurprisingly, in January 2025, Google updated the Search Quality Rater Guidelines to align with this approach.

Among other changes, the Page Quality Lowest and Low sections were revised.

Notably, a new section on Scaled Content Abuse was introduced, mirroring elements of the spam policy and reinforcing Google’s stance on mass-produced, low-value content.

This client didn’t receive a manual action, but it was clear that Google had flagged the site as lower quality.

Scaling fast this way is unsustainable, and it also raises bigger challenges and responsibilities for us as web users.

Someone in the industry recently shared a brilliant metaphor with me for the risk posed by the proliferation of AI-generated content:

For all the people churning out AI content with minimal oversight: they’re just peeing in the community pool.

Ingesting large amounts of text only to regurgitate similar content is a modern equivalent of old-school article spinning (a popular spam tactic of the early 2000s).

It clutters the digital space with low-value content and erodes trust in online information.

Personally, that’s not where I’d want the web to go.

How to do it differently

The rise of AI has accelerated programmatic SEO and the push to scale quickly, but just because it’s easy doesn’t mean it’s the right approach.

If I had been involved from the start, I know I would have taken a different approach. But would that mean the strategy no longer qualifies as programmatic? Probably.

Can AI-powered programmatic SEO genuinely scale effectively? Honestly, I’m not sure.

What I do know is that there are valuable lessons to take away.

Here are a few insights from this project and the advice I would have given if this client had worked with us from the start.

Begin with the goal in mind

We’ve all heard it before, but some still miss it: no matter how you scale, the goal should always be to serve people, not search engines.

SEO should be a byproduct of great content, not the goal.

In this case, the client’s intent was unclear, but the data suggested they were mass-producing pages just to rank.

That’s not a strategy I would have recommended.

Focus on creating helpful, unique content (even if templated)

The problem wasn’t the use of templates, but the lack of meaningful differentiation and information gain.

If you’re scaling with programmatic SEO, make sure those pages genuinely serve users. Here are a few ways to do that:

- Ensure each programmatic page offers unique user value, such as expert commentary or real stories.

- Use dynamic content blocks instead of duplicating templates.

- Include data-driven insights and user-generated content (UGC).
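A publish gate is one way to enforce this in a programmatic pipeline: a page only ships if it carries page-specific value beyond the shared template. The sketch below is a hypothetical illustration; the `ProgrammaticPage` fields, the thresholds, and the rule of requiring two of three signals are assumptions to adapt, not a standard.

```python
from dataclasses import dataclass, field


@dataclass
class ProgrammaticPage:
    """Hypothetical fields a programmatic template might be populated with."""
    slug: str
    expert_comment: str = ""                                      # written by a person
    ugc_reviews: list[str] = field(default_factory=list)          # user-generated content
    data_points: dict[str, float] = field(default_factory=dict)   # page-specific stats


def unique_value_signals(page: ProgrammaticPage, min_reviews: int = 3,
                         min_data_points: int = 2) -> list[str]:
    """List the kinds of page-specific value present beyond the shared template."""
    signals = []
    if page.expert_comment.strip():
        signals.append("expert commentary")
    if len(page.ugc_reviews) >= min_reviews:
        signals.append("user reviews")
    if len(page.data_points) >= min_data_points:
        signals.append("page-specific data")
    return signals


def publish_ready(page: ProgrammaticPage, min_signals: int = 2) -> bool:
    """Only publish pages that add at least `min_signals` kinds of unique value."""
    return len(unique_value_signals(page)) >= min_signals
```

Pages that fail the gate can be held back until they have something unique to offer, rather than being published as near-copies of their siblings.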

Personally, I love using UGC as a way to scale quickly without compromising quality, triggering spam signals, or polluting the digital ecosystem.

Tory Gray shares some great examples of this approach on our SEOs Getting Coffee podcast.

Avoid over-reliance on AI

AI has incredible potential when used responsibly.

Over-reliance is a risk to sustainable business growth and affects the wider web.

In this case, the client’s data strongly suggested their content was AI-generated and automatically produced. It lacked depth and differentiation.

They should have combined AI with human expertise, incorporating real insights, case studies, and industry knowledge.

Focus on brand

Invest in brand building before and alongside SEO, because authority matters.

Long-term success comes from building brand recognition, not just chasing rankings.

This client’s brand had low recognition, and its branded search traffic showed no organic growth.

It was clear SEO had taken priority over brand development.

Strengthening brand authority also means diversifying acquisition channels and reinforcing user signals beyond Google rankings.

At a time when AI Overviews are eating into traffic, this can be the difference between thriving and shutting down.

Avoid clear violations of Google’s guidelines

Some Google guidelines are open to interpretation, but many are not. Don’t push your luck.

Ignoring them won’t work and will only help tank your domain.

In this case, rankings dropped (most likely permanently) because the client’s practices violated Google’s policies.

They misused the Google Indexing API, sending spam signals to Google. Their engagement metrics showed unnatural spikes.

And those were just a few of the red flags. The party was over, and all that remained was a scorched domain.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff, and contributions are checked for quality and relevance to our readers. The opinions they express are their own.