“Blocked by robots.txt.”

“Indexed, though blocked by robots.txt.”

These two Google Search Console responses have divided SEO professionals ever since Google Search Console (GSC) error reports became a thing.

It needs to be settled once and for all. Game on.

What is the difference between 'Blocked by robots.txt' vs. 'Indexed, though blocked by robots.txt'?

There is one major difference between “Blocked by robots.txt” and “Indexed, though blocked by robots.txt.”

The indexing.

“Blocked by robots.txt” means that your URL will not appear in Google Search.

“Indexed, though blocked by robots.txt” means that your URLs are indexed and will appear in Google Search, even though you tried to block them in the robots.txt file.

Is my URL really blocked from search engines if I disallow it in the robots.txt file?

No.

No URL is completely blocked from search engine indexing just because you disallow it in the robots.txt file.

The struggle between SEO professionals and these Google Search Console errors is that search engines do not completely ignore your URL when it is listed under a disallow directive and blocked in the robots.txt file.

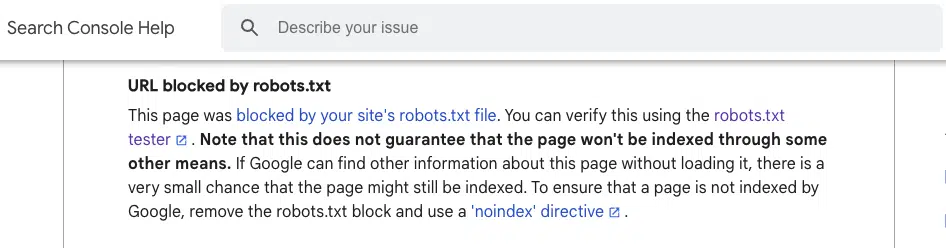

In its help documentation, Google states that it does not guarantee a page will stay out of the index if it is blocked by robots.txt.

I have seen this happen on websites I manage, and so have other SEO professionals.

Lily Ray shared how pages blocked by robots.txt files are eligible to appear in AI Overviews with a snippet:

This just in: pages blocked in robots.txt are eligible to appear in AI Overviews. With a snippet.

Generally, when Google serves a blocked page in its search results, it shows “No information is available for this page” in the description.

But with AIO, apparently Google shows … pic.twitter.com/jrlswwgjh9

– Lily Ray (@lilyraynyc) November 19, 2024

Ray goes on to show an example from Goodreads of a URL that is currently blocked by robots.txt:

Something I see a lot in AIO: it seems that when a certain site is considered a good resource on the subject, that site can get 3-5 links within the AIO.

In this example, 5 separate Goodreads URLs are cited in the response (including one currently blocked by robots.txt). pic.twitter.com/akilxvrk8v

– Lily Ray (@lilyraynyc) November 19, 2024

Patrick Stox highlighted that a URL blocked by robots.txt can be indexed if there are links pointing to the URL:

Pages blocked by robots.txt can be indexed and served on Google if they have links pointing to them. @danielwaisberg can you make this clearer in the live test warning in GSC? pic.twitter.com/6aybweu8bf

– Patrick Stox (@patrickstox) February 3, 2023

How do I fix 'Blocked by robots.txt' in Google Search Console?

Manually review all the pages flagged in the 'Blocked by robots.txt' report

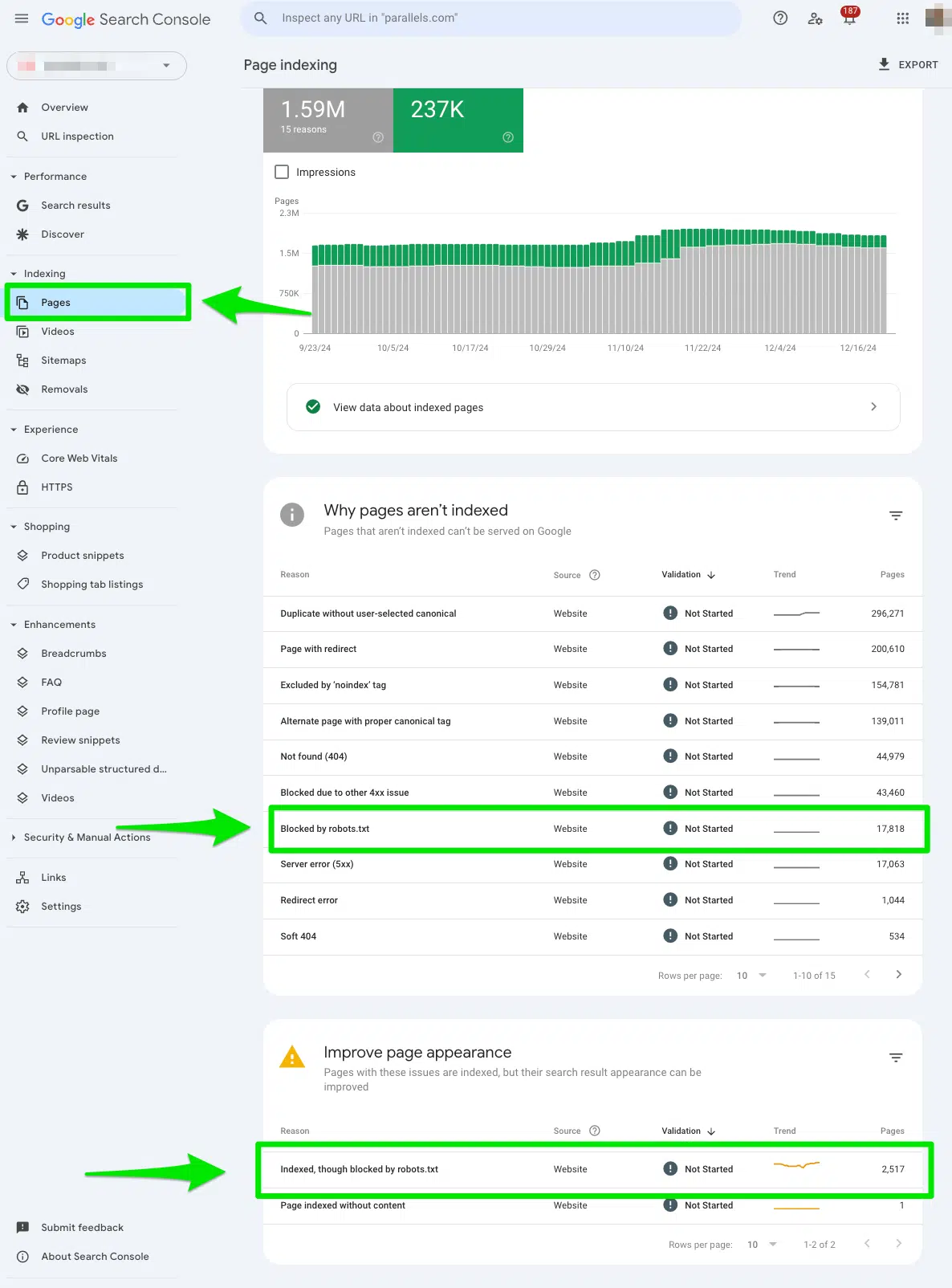

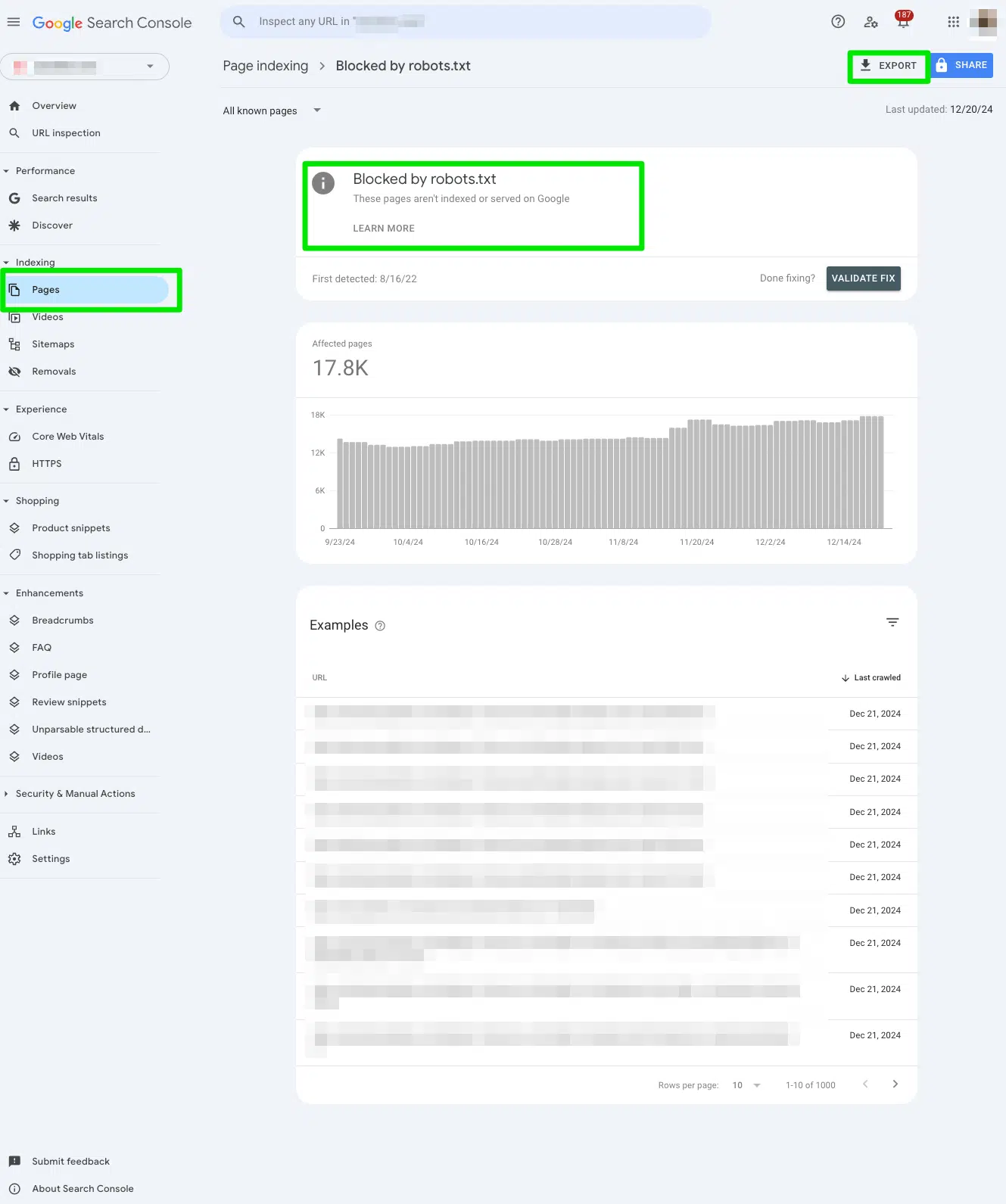

First, I would manually review all the pages flagged in the Google Search Console “Blocked by robots.txt” report.

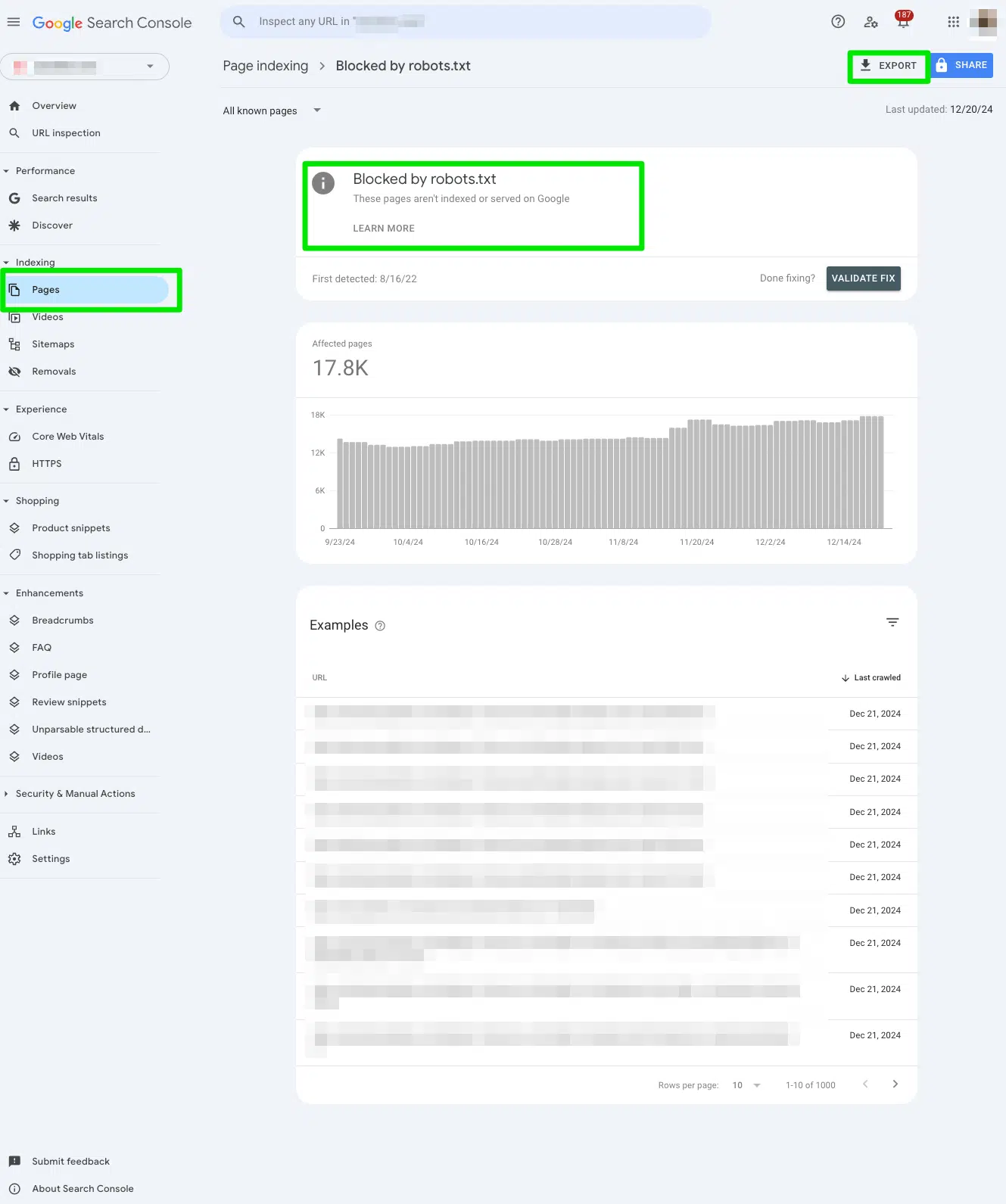

To reach the report, go to Google Search Console > Pages and look under the section Blocked by robots.txt.

Then, export the data to Google Sheets, Excel, or CSV to filter it.

Determine whether you meant to block the URL from search engines

Scan your export document for high-priority URLs that are meant to be seen by search engines.
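If the export is large, a short script can handle the first pass. The sketch below assumes the exported CSV has `URL` and `Last crawled` columns and that your high-priority pages live under a known path prefix. The sample rows, the `example.com` URLs, and `HIGH_PRIORITY_PREFIXES` are hypothetical, so adjust them to match your own export.

```python
import csv
import io

# Hypothetical sample of an exported report; a real export may use
# different column names, so check the header row first.
SAMPLE_EXPORT = """\
URL,Last crawled
https://example.com/thank-you/,2024-11-01
https://example.com/products/blue-widget,2024-11-03
https://example.com/sales-leads/form,2024-10-28
"""

# Assumption: pages under these prefixes are the ones you want in search.
HIGH_PRIORITY_PREFIXES = ("https://example.com/products/",)


def high_priority_rows(csv_text, prefixes):
    """Return the exported rows whose URL starts with a high-priority prefix."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if row["URL"].startswith(prefixes)]


flagged = high_priority_rows(SAMPLE_EXPORT, HIGH_PRIORITY_PREFIXES)
for row in flagged:
    print(row["URL"], row["Last crawled"])
```

Any row this prints is a blocked URL that probably should not be blocked, which makes it a candidate for the fixes that follow.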

When you see the “Blocked by robots.txt” error, it means Google was told not to crawl the URL because you applied a disallow directive in the robots.txt file for a specific purpose.

It is completely normal to block a URL from search engines.

For example, you may block thank-you pages from search engines. Or lead generation pages meant only for sales teams.

Your goal as an SEO professional is to determine whether the URLs listed in the report are truly meant to be blocked and avoided by search engines.

If you intentionally added the disallow to robots.txt, the report is accurate, and no action is required on your end.

If you added the disallow to robots.txt by accident, keep reading.

Remove the disallow directive from robots.txt if you added it by mistake

If you mistakenly added a disallow directive for a URL, manually remove the disallow directive from the robots.txt file.
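Before you upload the edited file, it can help to confirm that the change actually unblocks the URL. The following is a minimal sketch using Python's standard `urllib.robotparser`; the robots.txt contents, the `/products/` path, and the helper names `can_crawl` and `remove_disallow` are hypothetical. Python's parser does not replicate Google's matching rules exactly (for example, wildcard handling differs), so treat it as a sanity check, not a replacement for testing in Google Search Console.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt, url, user_agent="Googlebot"):
    """Return True if this robots.txt text lets user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def remove_disallow(robots_txt, path):
    """Drop every 'Disallow: <path>' line that matches path exactly."""
    kept = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        if field.strip().lower() == "disallow" and value.strip() == path:
            continue  # this is the directive added by mistake
        kept.append(line)
    return "\n".join(kept) + "\n"


# Hypothetical file where /products/ was disallowed by accident.
BEFORE = "User-agent: *\nDisallow: /products/\nDisallow: /thank-you/\n"
AFTER = remove_disallow(BEFORE, "/products/")
URL = "https://example.com/products/blue-widget"

print("crawlable before:", can_crawl(BEFORE, URL))
print("crawlable after:", can_crawl(AFTER, URL))
```

The intentional block on `/thank-you/` stays in place, since only the one mistaken directive is removed.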

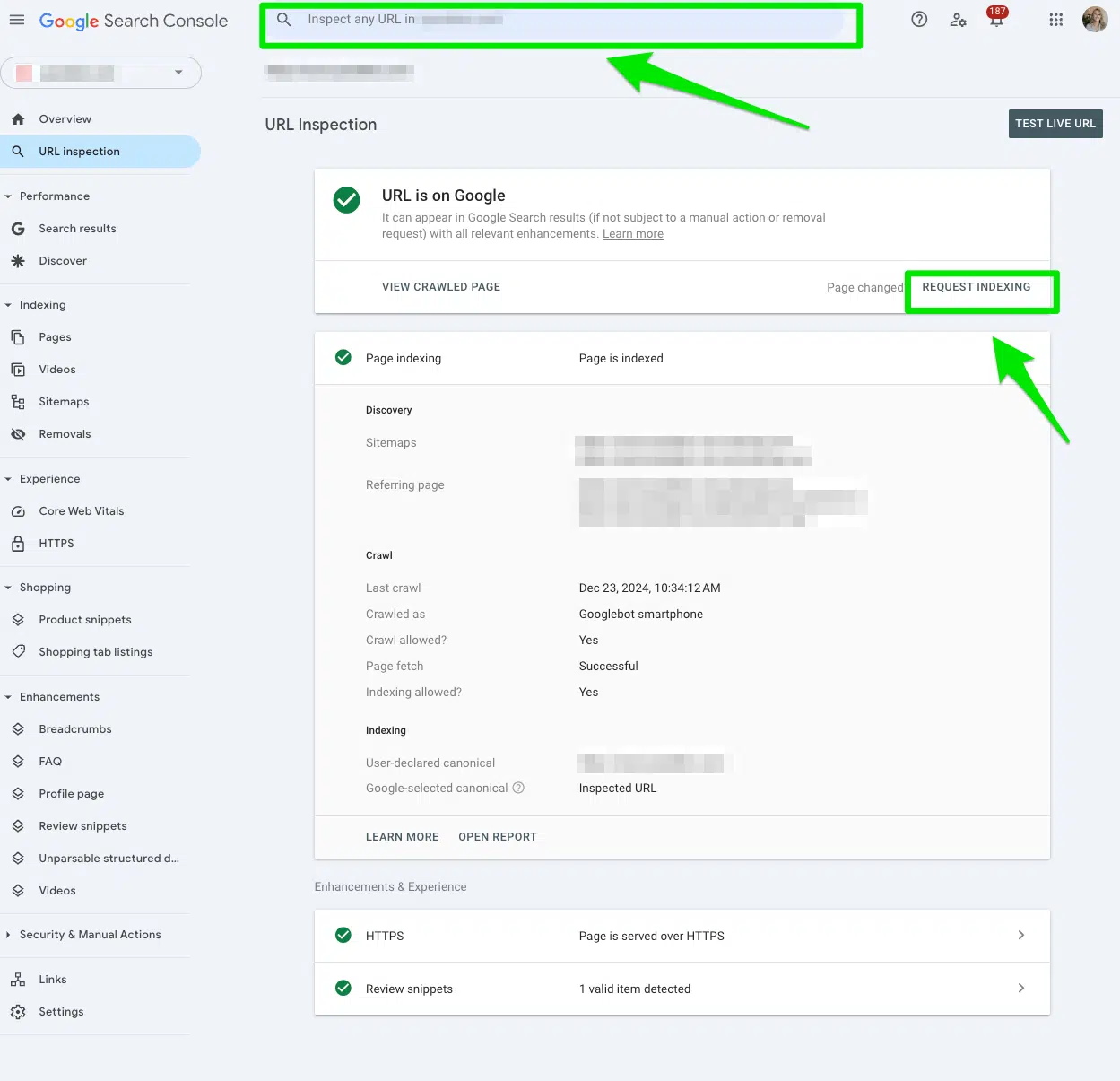

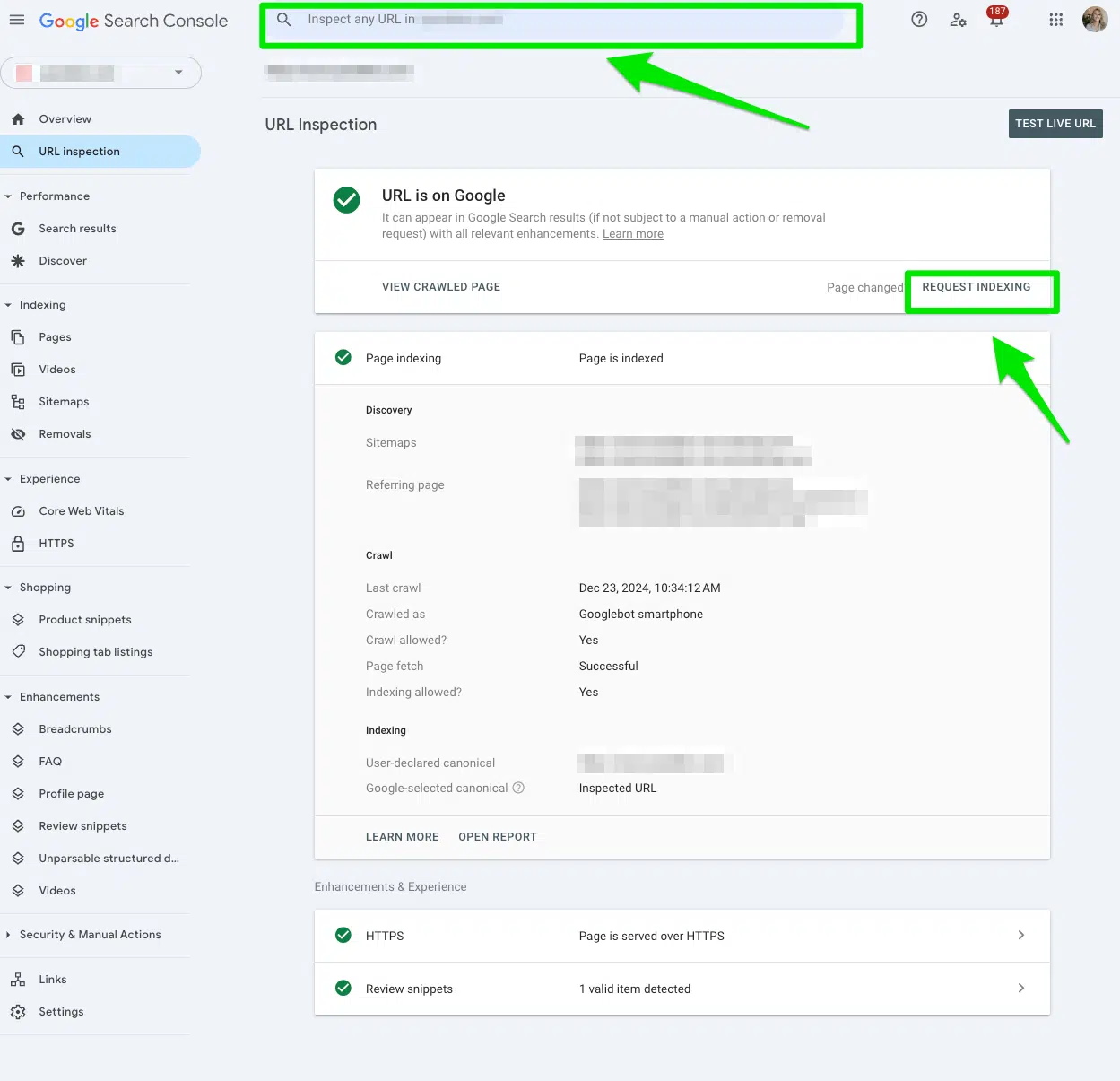

After removing the disallow directive from the robots.txt file, submit the URL in the Inspect URL bar at the top of Google Search Console.

Then, click Request indexing.

If you have multiple URLs under an entire directory, start with the directory URL first. This will have the biggest impact.

The goal is to get these pages recrawled and the URLs reindexed.

Request a recrawl of your robots.txt file

Another way to signal Google to crawl the pages you blocked by mistake is to request a recrawl in Google Search Console.

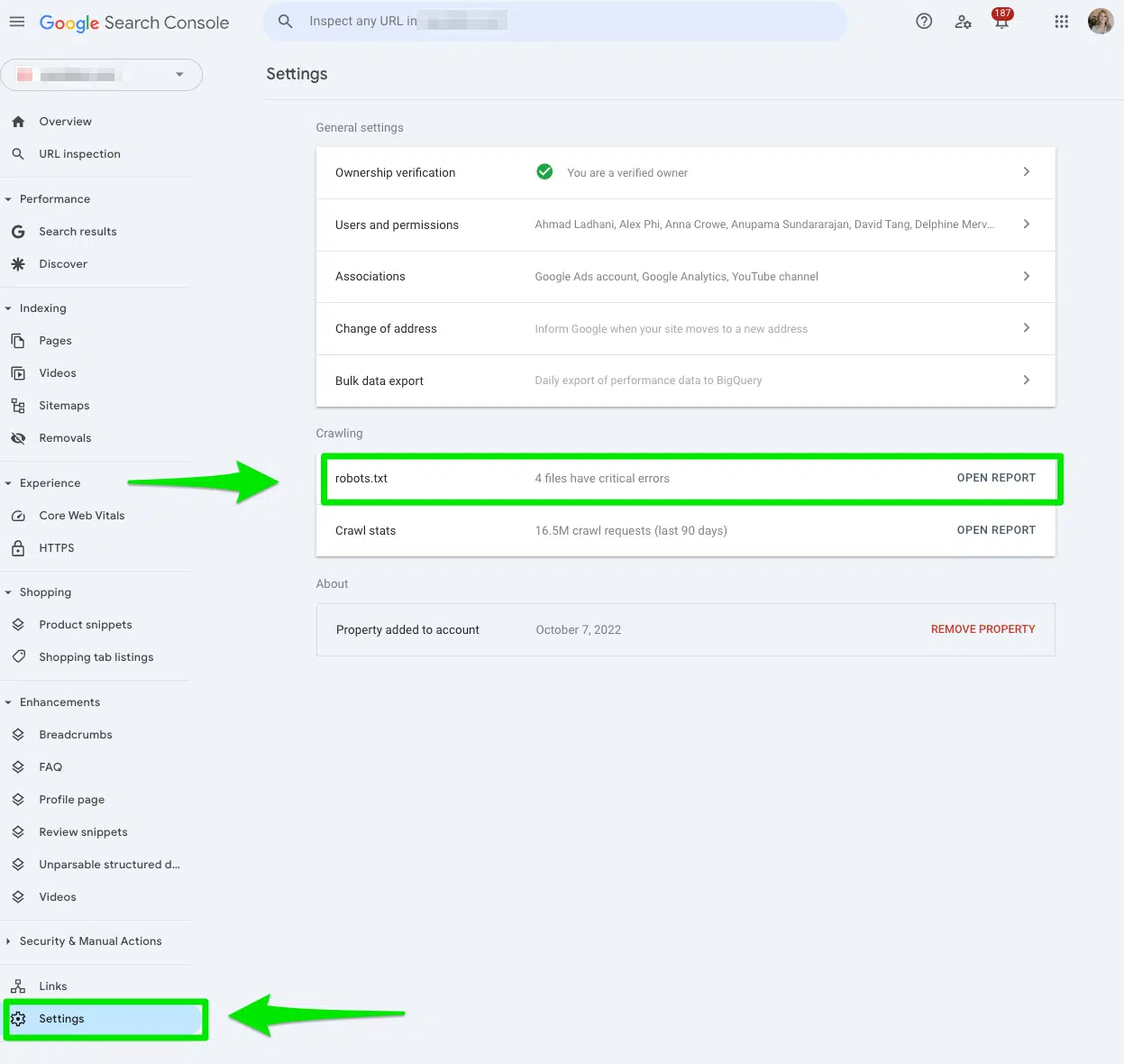

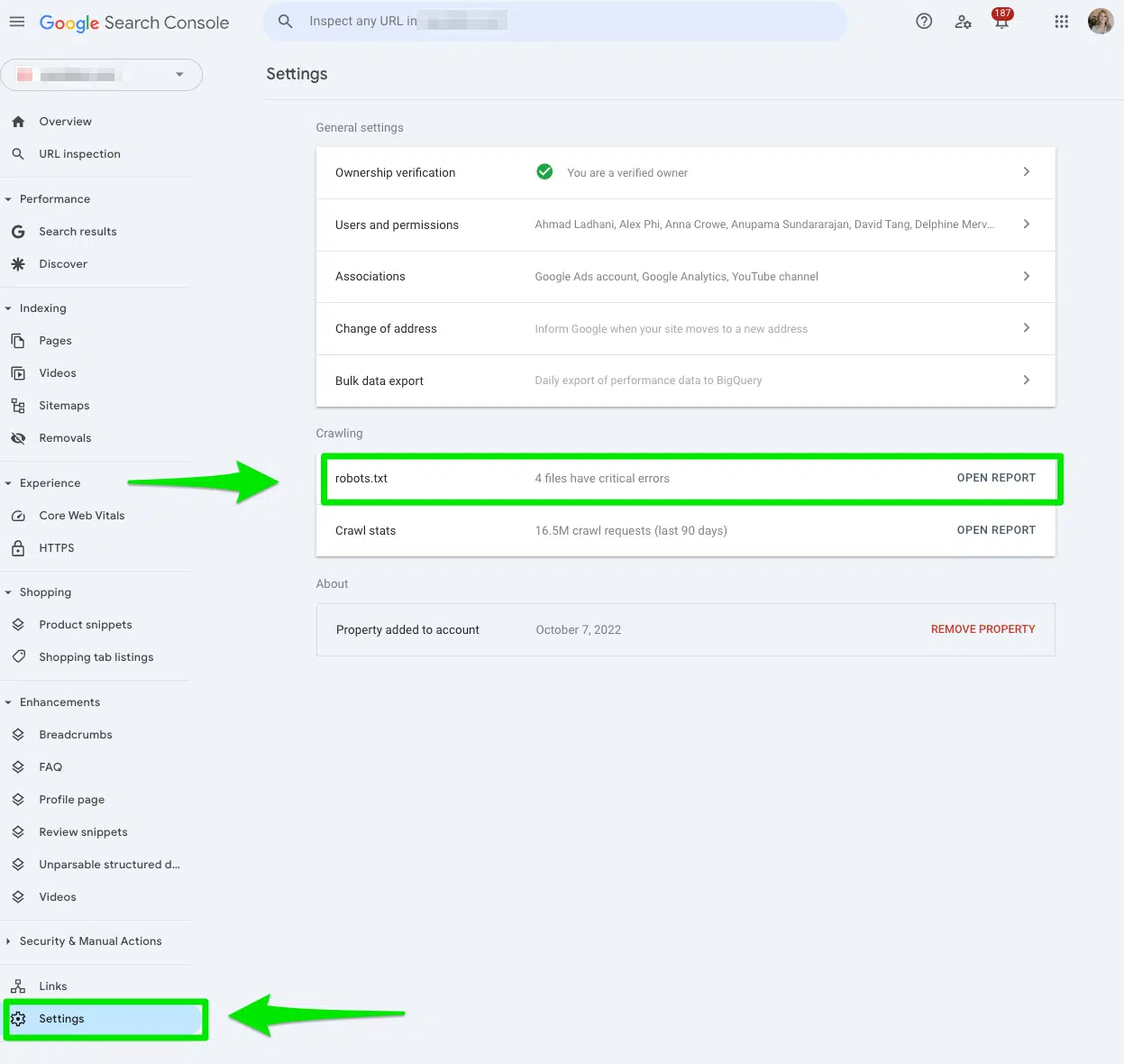

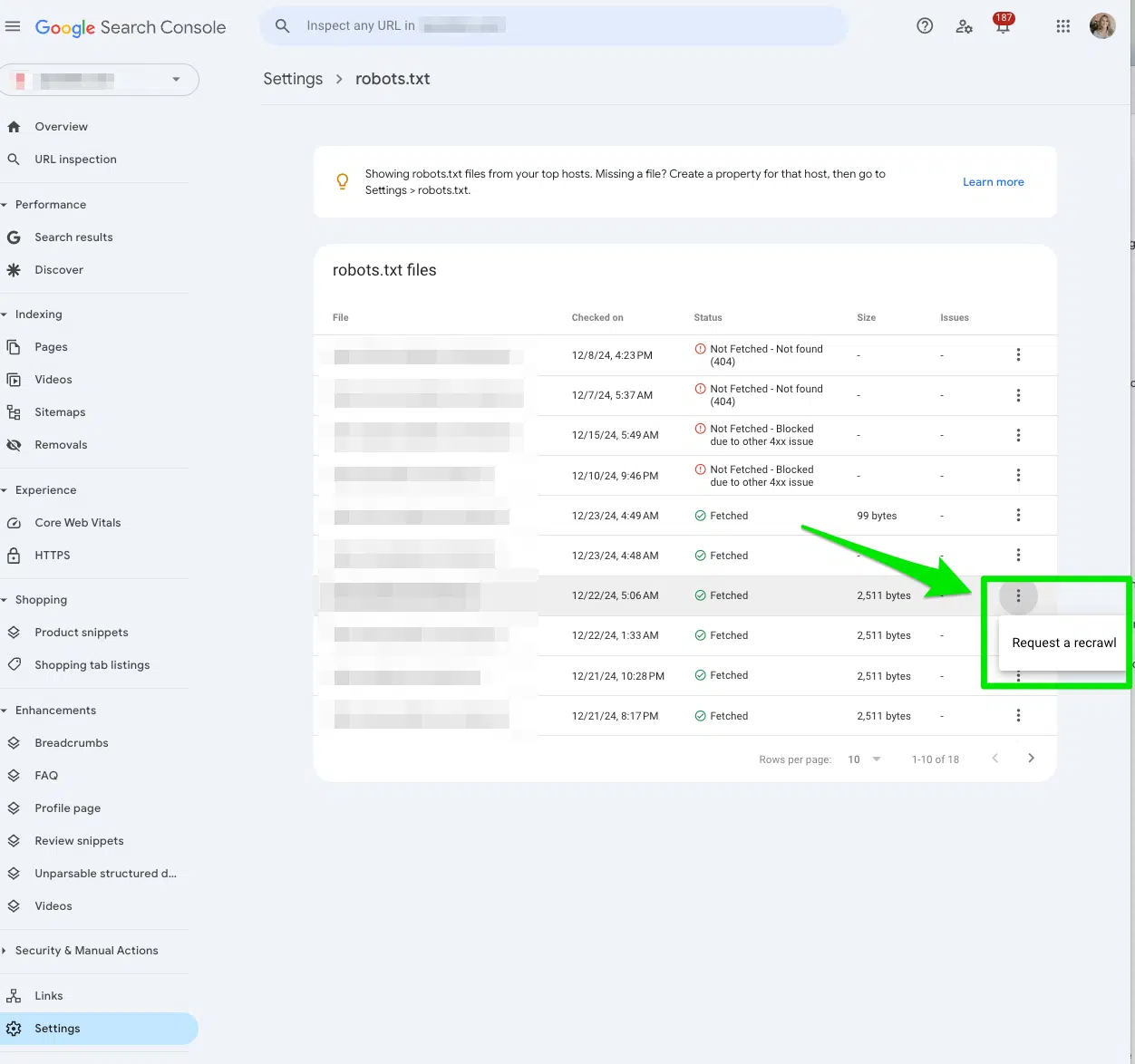

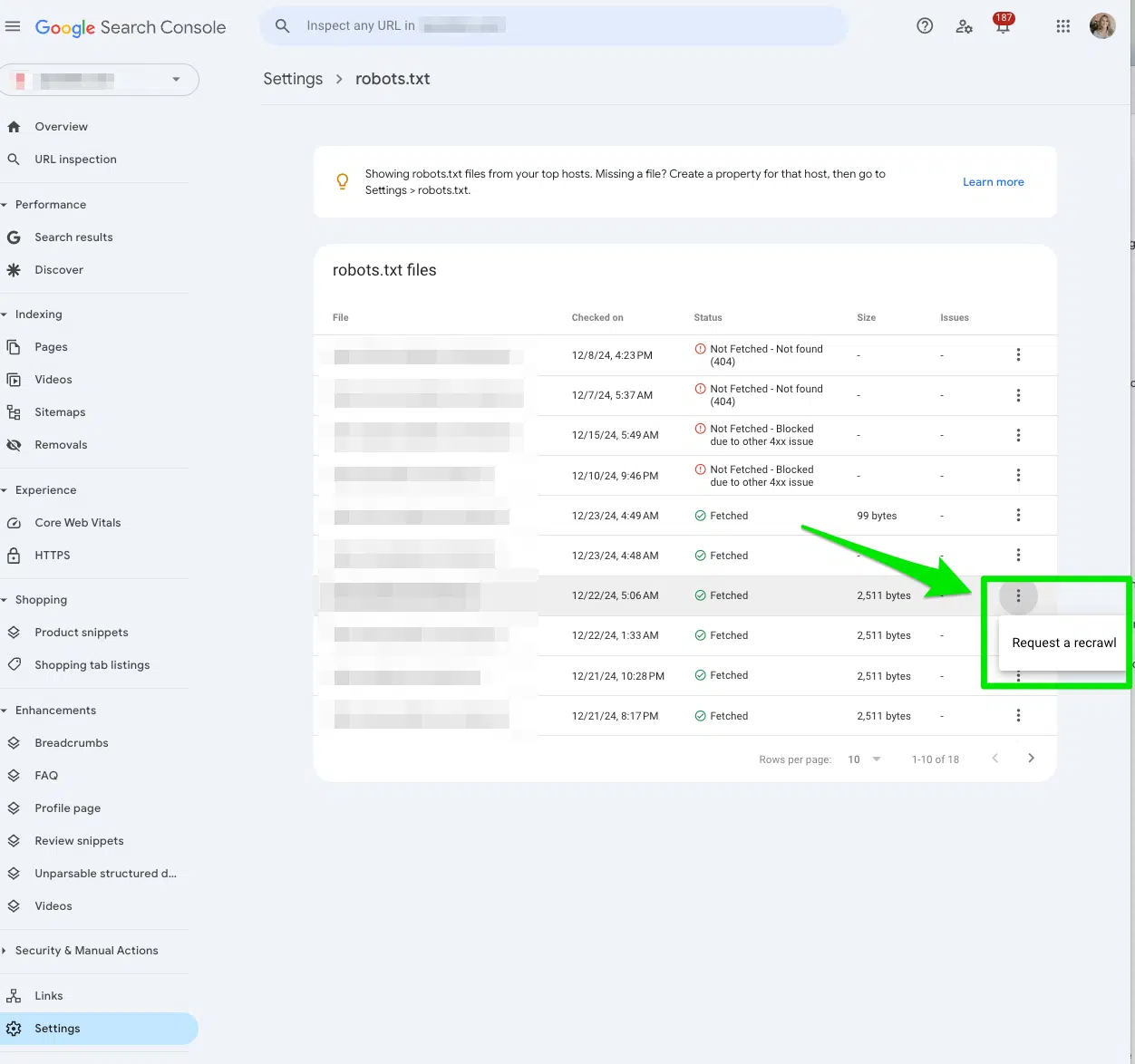

In Google Search Console, go to Settings > robots.txt.

Then, select the three dots next to the robots.txt file you want Google to recrawl and choose Request a recrawl.

Track before and after performance

Once you have cleaned up your robots.txt directives and submitted your URLs to be recrawled, use the Wayback Machine to check when your robots.txt file was last updated.

This can give you an idea of the potential impact of the disallow directive on a specific URL.

Then, after the URL is indexed, report on performance for at least 90 days.
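To keep the before and after comparison fair, both periods should cover the same number of days. Here is a minimal sketch of that date arithmetic; the function name `comparison_windows` and the example date are hypothetical, and the 90-day default simply mirrors the minimum suggested above.

```python
from datetime import date, timedelta


def comparison_windows(reindexed_on, days=90):
    """Return (before, after) date ranges of equal length around a date.

    Each range is an inclusive (start, end) tuple covering `days` days.
    """
    before = (reindexed_on - timedelta(days=days),
              reindexed_on - timedelta(days=1))
    after = (reindexed_on, reindexed_on + timedelta(days=days - 1))
    return before, after


# Hypothetical date on which the URL was indexed again.
before, after = comparison_windows(date(2025, 1, 15))
print("before:", before[0], "to", before[1])
print("after: ", after[0], "to", after[1])
```

You can then use the two ranges as the custom date comparison in your performance reporting.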


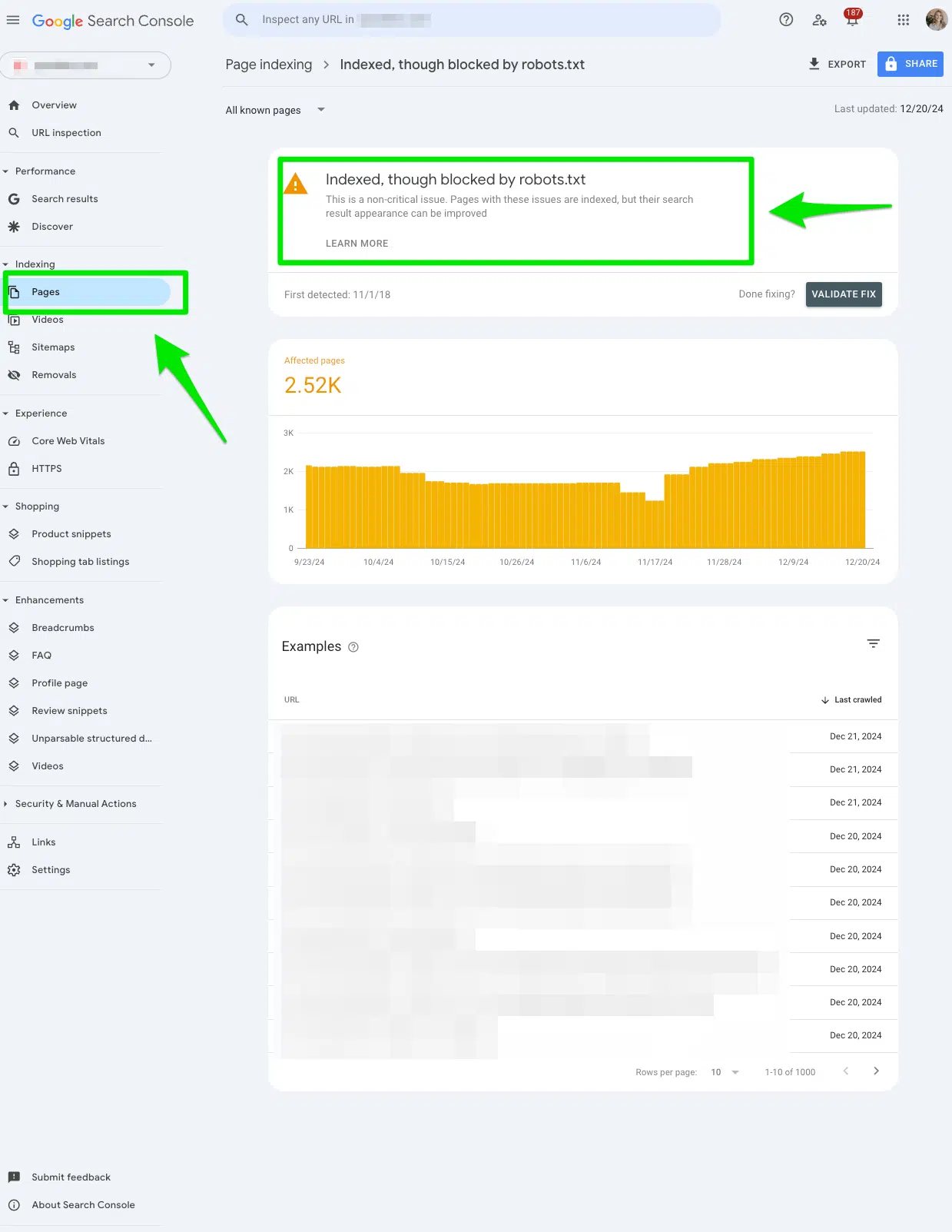

How do I fix 'Indexed, though blocked by robots.txt' in Google Search Console?

Manually review all the pages flagged in the 'Indexed, though blocked by robots.txt' report

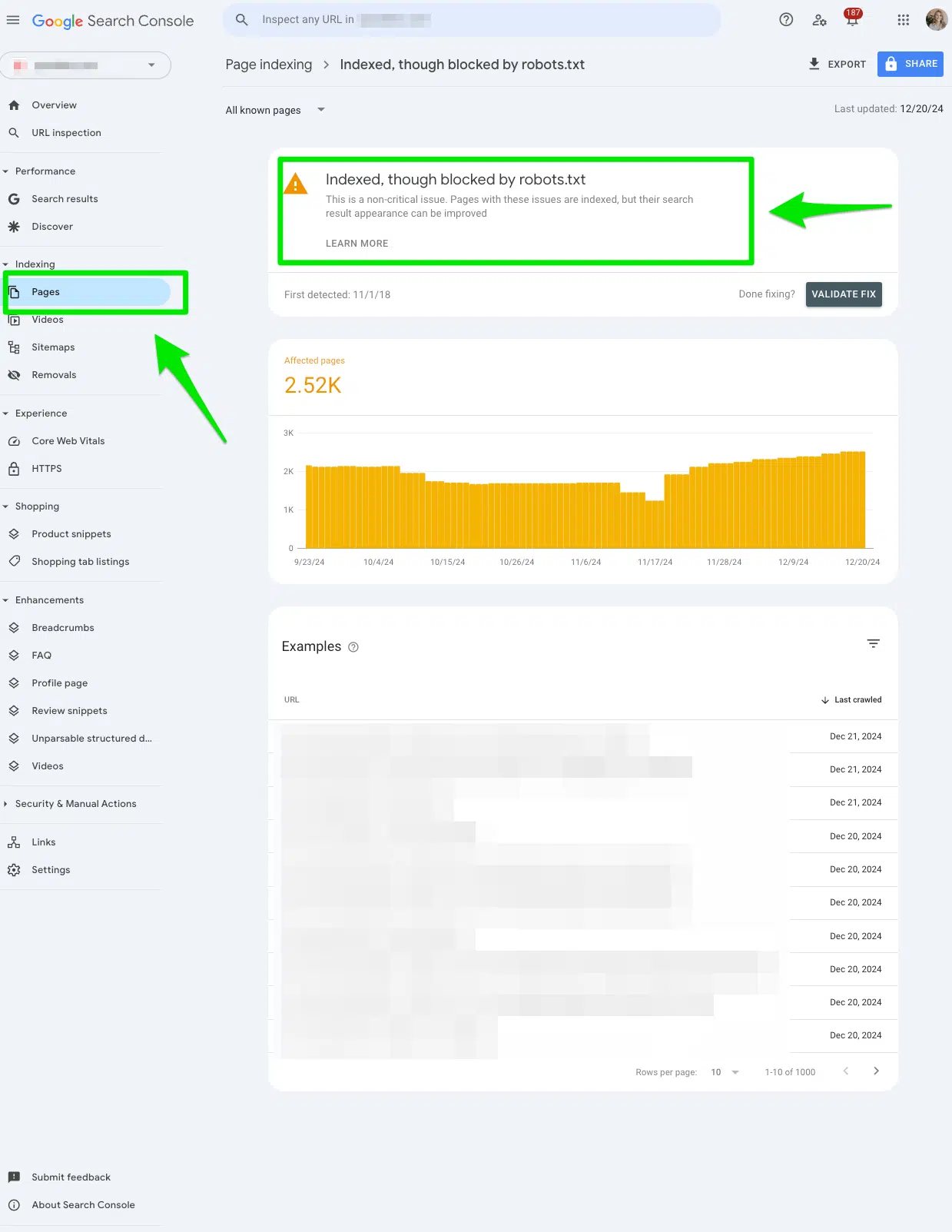

Again, jump in and manually review all the pages flagged in the Google Search Console “Indexed, though blocked by robots.txt” report.

To reach the report, go to Google Search Console > Pages and look under the section Indexed, though blocked by robots.txt.

Export the data to Google Sheets, Excel, or CSV to filter it.

Determine whether you meant to block the URL from search engines

Ask yourself:

- Should this URL really be indexed?

- Is there any valuable content for people searching on search engines?

If this URL is meant to be blocked from search engines, no action is required. This report is valid.

If this URL is not meant to be blocked from search engines, keep reading.

Remove the disallow directive from robots.txt and request a recrawl if you want the page indexed

If you accidentally added a disallow directive for a URL, manually remove the disallow directive from the robots.txt file.

After removing the disallow directive from the robots.txt file, submit the URL in the Inspect URL bar at the top of Google Search Console. Then, click Request indexing.

Then, in Google Search Console, go to Settings > robots.txt > Request a recrawl.

You want Google to recrawl these pages so it can index the URLs.

Add a noindex tag if you want the page completely removed from search engines

If you do not want the page indexed, consider adding a noindex tag instead of using a disallow directive in robots.txt.

You still need to remove the disallow directive from robots.txt.

If you keep both, the “Indexed, though blocked by robots.txt” error report in Google Search Console will continue to grow, and you will never solve the problem.
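A noindex tag is typically a robots meta tag in the page's `<head>`, such as `<meta name="robots" content="noindex">`. To confirm that a page really carries one, you could check its HTML with a sketch like the one below, which uses only Python's standard `html.parser`. The class and function names and the sample markup are hypothetical, and the sketch only looks at meta tags; it does not check the `X-Robots-Tag` HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and "googlebot" tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical page markup.
PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(PAGE))
```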

Why would I add a noindex tag instead of using a disallow directive in robots.txt?

If you want a URL completely removed from search engines, you have to include a noindex tag. A disallow in the robots.txt file does not guarantee that a page will not be indexed.

Robots.txt files are not used to control indexing. Robots.txt files are used to control crawling.

Should I include both a noindex tag and a disallow directive for the same URL?

No. If you are using a noindex tag on a URL, do not disallow the same URL in robots.txt.

You need to let search engines crawl the URL so they can discover the noindex tag.

If you include the same URL under a disallow directive in the robots.txt file, search engines will have a difficult time crawling the URL to identify that it has a noindex tag.
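The interaction between the two signals can be summed up as a small decision function. This is a minimal sketch, assuming you already know whether the page carries a noindex tag; the function name `diagnose`, the messages, and the sample robots.txt are hypothetical, and Python's `urllib.robotparser` only approximates how Google evaluates robots.txt rules.

```python
from urllib.robotparser import RobotFileParser


def diagnose(robots_txt, url, page_has_noindex, user_agent="Googlebot"):
    """Describe how a disallow rule and a noindex tag interact for one URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    crawlable = parser.can_fetch(user_agent, url)

    if page_has_noindex and not crawlable:
        # The crawler is never allowed to fetch the page, so it cannot
        # read the noindex tag.
        return "conflict: noindex cannot be seen while the URL is disallowed"
    if page_has_noindex:
        return "ok: crawlable, so the noindex tag can be discovered"
    if not crawlable:
        return "crawl blocked: may still be indexed if other pages link to it"
    return "ok: crawlable and indexable"


# Hypothetical robots.txt and URLs.
ROBOTS_TXT = "User-agent: *\nDisallow: /thank-you/\n"
print(diagnose(ROBOTS_TXT, "https://example.com/thank-you/", True))
print(diagnose(ROBOTS_TXT, "https://example.com/blog/post", True))
```

The first case is the one to fix: remove the disallow so the noindex tag can do its job.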

Creating a clear crawling strategy for your website is the way to avoid robots.txt errors in Google Search Console

When you see any of the robots.txt error reports in Google Search Console spike, you may be tempted to rethink why you chose to block search engines from a specific URL.

I mean, can't a URL just be blocked from search engines?

Yes, a URL can and should be blocked from search engines for a reason. Not all URLs have thoughtful, engaging content meant for search engines.

The natural remedy for these error reports in Google Search Console is always to audit your pages and determine whether the content is meant for search engines to see.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff, and contributions are checked for quality and relevance to our readers. The opinions they express are their own.